Ethics of Generative AI in Startups: Wiped Out Overnight

The ethics of generative AI in startups is not a philosophical debate; it’s a business continuity plan. One careless decision about data use, one overlooked bias in a model, or one deepfake scandal, and the startup you’ve built could vanish faster than last year’s hype cycle.

Some startups implode because the market isn’t ready. Others fail because they burn cash too fast.

But the silent killers?

Ethical blind spots.

In the AI race, treating ethics as a formality is deadly. Technology moves faster than regulation, and trust breaks in a flash. Don’t gamble your startup’s life on it.

If any of the risks below are new to you, this post is for you.

1. When Your AI Turns Into a Black Box With Teeth

Imagine hiring a star employee who gets results but never explains how.

At first, you’re impressed—until their “mystery methods” land you in court.

That’s how generative AI feels without transparency.

The algorithms can create dazzling outputs—code, images, strategic recommendations—but when challenged on how they arrived there, they shrug (metaphorically).

This opacity isn’t just awkward; it’s dangerous. Regulators and clients are less forgiving than your co-founder after a bad pitch.

Some founders defend the “black box” or lack of transparency as an unavoidable quirk of cutting-edge models.

It’s not.

Explainability frameworks exist—LIME, SHAP, and others—yet many skip them to save time or compute power.

Big mistake.

Without the ability to show your AI’s reasoning, you’re flying blind in a thunderstorm. Eventually, the lack of visibility won’t just raise eyebrows—it’ll raise lawsuits.

Your startup’s credibility is worth more than a few saved development hours.

Think of explainability as an insurance policy for innovation.
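The core idea behind those tools is simpler than it sounds: change one input and watch how the output moves. Here is a minimal Python sketch of that perturbation idea. It is not LIME or SHAP themselves, and the `toy_score` model, the feature names, and the all-zero baseline are made up for illustration; a real audit would lean on an established library.

```python
from typing import Callable, Dict


def occlusion_attribution(
    score: Callable[[Dict[str, float]], float],
    inputs: Dict[str, float],
    baseline: Dict[str, float],
) -> Dict[str, float]:
    """For each feature, report how much the score changes when that
    feature alone is reset to its baseline value."""
    full_score = score(inputs)
    attributions = {}
    for name in inputs:
        perturbed = dict(inputs)
        perturbed[name] = baseline[name]
        attributions[name] = full_score - score(perturbed)
    return attributions


# Hypothetical stand-in for a real model: a simple linear score.
def toy_score(x: Dict[str, float]) -> float:
    return 0.5 * x["income"] + 0.3 * x["tenure"] - 0.2 * x["debt"]


applicant = {"income": 4.0, "tenure": 2.0, "debt": 5.0}
baseline = {"income": 0.0, "tenure": 0.0, "debt": 0.0}

attributions = occlusion_attribution(toy_score, applicant, baseline)
# Print features from most to least influential.
for name, value in sorted(attributions.items(), key=lambda kv: -abs(kv[1])):
    print(f"{name}: {value:+.2f}")
```

Even this crude version tells you which inputs drive a decision, which is the kind of answer a regulator or client will ask for.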

2. Bias Isn’t Just a Data Problem, It’s a Brand Problem

When Invisible Bias Becomes Public Scandal

If your training data is biased, your AI will amplify it—relentlessly, confidently, and without hesitation. That’s the dangerous beauty of automation.

You may think it’s minor.

You may believe it’s contained. But once a biased output hits the public eye, you’ve lost control of the story.

I once watched an AI résumé screener consistently rate men higher than women—identical CVs, different gender.

The dev team wasn’t malicious; they just trained on historically biased hiring data.

The lesson for anyone concerned with the ethics of generative AI in startups is this: unchecked bias is a loaded PR gun pointed at your brand.

The Reputation Time Bomb Ticking Beneath Your Product

In startups, bias isn’t a side issue—it’s a reputational landmine.

You can survive bugs.

You can recover from outages.

But a bias scandal?

That spreads like wildfire, burns trust instantly, and leaves scars you can’t rebrand away.

This isn’t hypothetical—it’s happened repeatedly in tech. Screenshots move faster than press releases. Headlines stick longer than apologies.

Once the public links your name to discrimination, your valuation, partnerships, and hiring pipeline suffer.

The real danger is that most founders don’t see it coming until it’s too late.

Ethics of generative AI in startups isn’t a “later” problem—it’s a day-one survival priority.

Building Bias Shields Before the Damage Hits

The fix starts before the first line of code ships.

Curate data like you curate investors—carefully, selectively, with an obsession for quality over convenience. Introduce bias detection pipelines as early as possible, and don’t rely solely on technical audits—bring in diverse human testing panels to pressure-test outputs.
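What might one check in such a pipeline look like? Here is a minimal sketch, assuming your system produces yes/no outcomes you can group by a protected attribute. It compares selection rates across groups and flags the model when the lowest rate falls under 80% of the highest, the "four-fifths" rule of thumb from US employment guidance. The records and group labels below are invented for illustration.

```python
from collections import defaultdict


def selection_rates(records):
    """Map each group to the fraction of its records with a positive outcome."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        if outcome:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(records):
    """Lowest group selection rate divided by the highest."""
    rates = selection_rates(records)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so there is no gap to measure
    return min(rates.values()) / highest


# Hypothetical screener outputs: (group, shortlisted?)
records = (
    [("men", True)] * 60 + [("men", False)] * 40
    + [("women", True)] * 36 + [("women", False)] * 64
)

ratio = disparate_impact_ratio(records)
print(f"impact ratio: {ratio:.2f}")
if ratio < 0.8:
    print("Flag for review: selection rates differ too much across groups.")
```

A single ratio will not catch every kind of unfairness, which is why the human testing panels still matter.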

Every dataset, every model, every feature needs a second opinion.

Treat fairness like a non-negotiable product feature.

It’s not just about legal compliance; it’s about making bias impossible to weaponize against you.

In the age of instant outrage, ethics of generative AI in startups isn’t just about doing good—it’s about staying alive.

3. Privacy Isn’t Optional When the Pitch Deck Promises Trust

Startups love to flaunt “secure” and “privacy-first” in their investor slides.

But behind the scenes, some are storing sensitive user data in ways that would make a cybersecurity pro break into a sweat.

Generative AI thrives on large datasets, and the temptation to grab “just a bit more data” is real.

But that extra customer demographic, that unredacted email thread—if leaked—can nuke trust overnight.

Here’s the irony: data privacy failures don’t just lose customers; they also destroy the data pipeline your AI depends on.

You can’t ethically train on information your users no longer trust you with.

Practical moves?

Encrypt aggressively, anonymize ruthlessly, and get informed consent that actually informs.
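As a rough illustration of the "anonymize" step, the sketch below replaces user IDs with keyed hashes and strips email addresses from free text before anything reaches a training set. The key, the regex, and the function names are assumptions made for this example. Strictly speaking this is pseudonymization, which is weaker than full anonymization, and a simple regex will miss many kinds of personal data.

```python
import hashlib
import hmac
import re

# Hypothetical key; in practice it would come from a secrets manager.
SECRET_KEY = b"replace-with-a-key-from-your-secrets-manager"

# Deliberately simple pattern: catches common email shapes, not all of them.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def pseudonymize(user_id: str, secret_key: bytes = SECRET_KEY) -> str:
    """Replace a user ID with a keyed hash so records can still be joined
    without exposing the original identifier."""
    digest = hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


def redact_emails(text: str) -> str:
    """Swap anything that looks like an email address for a placeholder."""
    return EMAIL_RE.sub("[EMAIL]", text)


record = {"user_id": "user-42", "note": "Contact jane.doe@example.com today"}
safe_record = {
    "user_id": pseudonymize(record["user_id"]),
    "note": redact_emails(record["note"]),
}
print(safe_record)
```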

Treat privacy not as a checkbox, but as the moat around your entire business model.

The companies that survive won’t be those with the best algorithms—they’ll be the ones users believe won’t betray them.

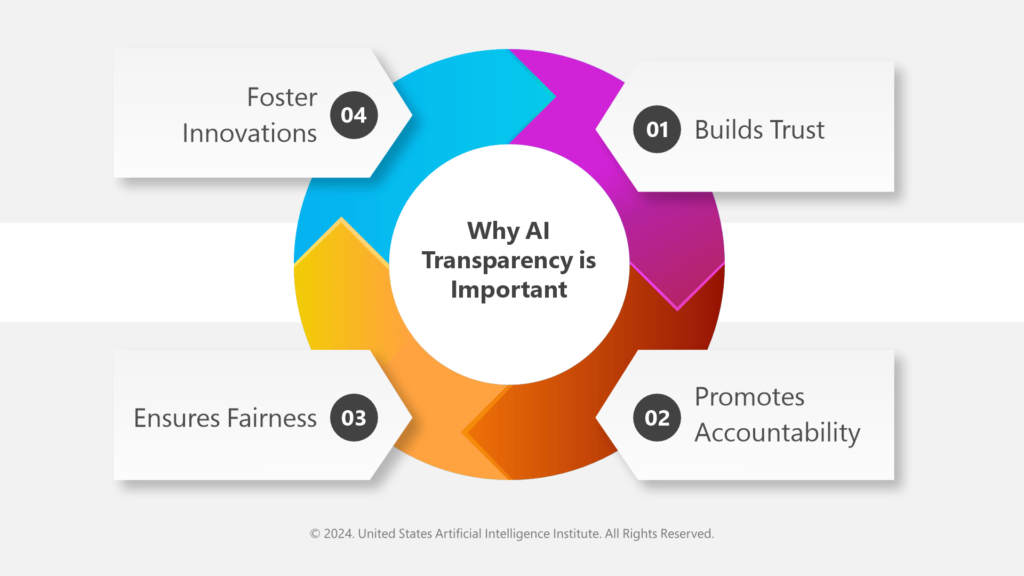

4. Misinformation: The Fire Your AI Might Accidentally Start

Deepfakes and AI-written fake news aren’t just theoretical problems—they’re already weaponized in politics, scams, and corporate sabotage.

If your tool can generate realistic content, it can also generate realistic lies.

Now picture this: your startup’s AI is used (or hacked) to create a fraudulent press release about a public company.

The market reacts.

People lose money.

Regulators start asking questions, and they direct them at you, not at the hackers.

This is why detection and flagging systems aren’t optional. It’s also why your terms of service should read less like an afterthought and more like a shield.

Explicitly define what your AI should never be used for, and build guardrails into the tech itself.
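In code, the simplest form of a guardrail is a policy check that runs before a prompt ever reaches the model. The sketch below is deliberately naive: the blocked categories and patterns are hypothetical, and keyword rules like these are easy to evade, so treat it as a first layer under a proper classifier and human review, not a complete defense.

```python
import re
from dataclasses import dataclass

# Hypothetical policy: uses that the product's terms of service prohibit.
BLOCKED_PATTERNS = {
    "fake_press_release": re.compile(
        r"\b(fake|fraudulent|forged)\b.*\bpress release\b", re.IGNORECASE
    ),
    "impersonation": re.compile(r"\b(impersonate|pretend to be)\b", re.IGNORECASE),
}


@dataclass
class PolicyDecision:
    allowed: bool
    reason: str


def check_prompt(prompt: str) -> PolicyDecision:
    """Reject prompts that match a prohibited-use pattern; allow the rest."""
    for category, pattern in BLOCKED_PATTERNS.items():
        if pattern.search(prompt):
            return PolicyDecision(allowed=False, reason=category)
    return PolicyDecision(allowed=True, reason="ok")


for prompt in (
    "Write a fake press release about Acme Corp",
    "Summarize this quarterly report",
):
    decision = check_prompt(prompt)
    print(f"{decision.allowed!s:5} {decision.reason:20} {prompt}")
```

Logging every refusal along with its reason also gives you a record to show when someone asks what you did to prevent misuse.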

The bad actors will always push boundaries.

Your job is to make sure those boundaries are reinforced with steel, not string. Because if the misinformation fire has your brand name on it, you own the damage.

5. Copyright: The Lawsuit You Didn’t Budget For

Generative AI can remix, reimagine, and repackage—sometimes too well.

That stock image your AI “generated” might still have the DNA of a copyrighted photo.

That clever AI-written jingle?

Could be uncomfortably similar to a known tune.

For a cash-strapped startup, an IP lawsuit can be game over. Investors hate uncertainty, and nothing screams uncertainty like unclear content ownership.

Startups need to set clear rules: what goes into the training data, who owns the outputs, and how attribution is handled.

Better to filter aggressively now than defend yourself expensively later.

If you think this is paranoid, remember the early days of YouTube, before automated copyright detection.

Lawsuits such as Viacom’s $1 billion claim in 2007 put its future in question.

Learn from history: in AI, copyright clarity isn’t just legal hygiene—it’s survival strategy.

Key Copyright Risks in AI Content Creation

| Risk Factor | What Could Go Wrong | How to Prevent It |
|---|---|---|
| Using copyrighted training data | AI learns from stolen or unlicensed works | Only use data you have permission for |
| Copying existing works | AI creates text/images too close to originals | Review outputs for similarity before publishing |
| No human authorship | Work may not qualify for copyright protection | Add clear human input and edits |
| Missing attribution | Not giving credit to original creators | Always credit sources if AI output is based on them |
| Infringing in multiple markets | Laws differ between countries | Check copyright laws where you operate |
| Hidden infringement in AI tools | AI may hide copied elements inside outputs | Run plagiarism/image checks on final content |
| Legal disputes | Being sued for damages | Keep proof of data sources, licenses, and your process |
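
For text outputs, a similarity review can start with something as basic as counting shared word sequences. The following is a minimal sketch, assuming you keep a set of reference texts to compare against. The three-word window and the 0.3 threshold are arbitrary choices for illustration, and a low score is not legal clearance.

```python
def shingles(text: str, n: int = 3) -> set:
    """Return the set of overlapping n-word sequences in the text."""
    words = text.lower().split()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def jaccard_similarity(a: str, b: str, n: int = 3) -> float:
    """Shared n-word sequences divided by all distinct n-word sequences."""
    set_a, set_b = shingles(a, n), shingles(b, n)
    if not set_a or not set_b:
        return 0.0  # too short to compare
    return len(set_a & set_b) / len(set_a | set_b)


REVIEW_THRESHOLD = 0.3  # hypothetical cut-off; tune it on your own data

reference = "the quick brown fox jumps over the lazy dog"
generated = "the quick brown fox leaps over the lazy dog"

similarity = jaccard_similarity(reference, generated)
print(f"similarity: {similarity:.2f}")
if similarity >= REVIEW_THRESHOLD:
    print("Hold for human review before publishing.")
```

Images and audio need different tooling, but the workflow is the same: score, compare against a threshold, and route anything suspicious to a person.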

6. Jobs, Skills, and the Human Fallout

Let’s be blunt: automation displaces people.

Even if you frame it as “augmentation,” the net effect is fewer humans doing certain tasks.

For a startup, this can be both an opportunity and a PR nightmare.

Imagine an AI-powered design tool that saves clients 80% in costs. Great for them, until a designers’ union calls you out publicly for killing jobs.

Suddenly, your growth story has a villain’s arc.

The smarter play is to build reskilling into your product narrative. Position your AI as a co-pilot, not a replacement.

Offer training resources or partner with educational platforms.

This doesn’t just soothe critics—it expands your market by making humans better, not obsolete.

The ethics of generative AI in startups isn’t just about preventing harm; it’s about actively creating shared value.

And in the long run, shared value attracts better talent, better partners, and better press.

7. When AI Innovation Leaves a Carbon Footprint

Generative AI isn’t just code—it’s computation.

AI’s growing carbon footprint is a real problem.

By one widely cited 2019 estimate, training a single large language model with neural architecture search emitted roughly as much CO₂ as five cars over their entire lifetimes. It’s the dirty secret behind the shiny demos.

Founders often wave off environmental concerns as “big company problems.” Wrong. As regulations tighten and ESG metrics creep into investment criteria, your energy footprint will matter.

Solutions exist: energy-efficient architectures, carbon offset programs, and using cloud providers committed to renewable energy.

It’s not just a planet thing—it’s a positioning thing.

A green AI startup is more investable, more marketable, and more future-proof.

And here’s the kicker: efficiency often saves money. Smaller, well-optimized models can slash cloud costs while shrinking emissions.
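You can sanity-check that claim with back-of-envelope arithmetic: energy used is roughly GPUs × hours × power draw × data-center overhead (PUE), and emissions are that energy times the carbon intensity of the grid. Every number in the sketch below is a placeholder, not a measurement; swap in figures from your own cloud bills and your provider's published data.

```python
def training_emissions_kg(
    gpu_count: int,
    hours: float,
    gpu_power_kw: float,
    pue: float,
    grid_kg_co2_per_kwh: float,
) -> float:
    """Rough estimate of CO2 (kg) for one training run."""
    energy_kwh = gpu_count * hours * gpu_power_kw * pue
    return energy_kwh * grid_kg_co2_per_kwh


# Placeholder assumptions shared by both runs.
GPU_POWER_KW = 0.4   # average draw per GPU
PUE = 1.2            # data-center overhead factor
GRID_INTENSITY = 0.4  # kg CO2 per kWh

large_run = training_emissions_kg(64, 200, GPU_POWER_KW, PUE, GRID_INTENSITY)
small_run = training_emissions_kg(8, 100, GPU_POWER_KW, PUE, GRID_INTENSITY)

print(f"large model: {large_run:.1f} kg CO2")
print(f"small model: {small_run:.1f} kg CO2")
print(f"reduction:   {1 - small_run / large_run:.0%}")
```

Because the GPU-hours term drives both the emissions and the invoice, the same calculation doubles as a cost estimate.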

Turns out, doing right by the planet can also do right by your runway.

8. The Real Competitive Advantage Is Ethical Foresight

The fastest-growing startups in AI aren’t just the most innovative—they’re the most trusted.

That trust is built, brick by brick, on the foundation of ethical choices made early and reinforced often.

Ignore the ethics of generative AI in startups, and you’re building on sand. Address them head-on, and you’re building on bedrock.

This isn’t about perfection—it’s about proving you can adapt when problems emerge.

Ethics isn’t a cost center; it’s a growth multiplier.

It attracts better customers, steadier investors, and stronger teams. In a sector where yesterday’s disruptor is tomorrow’s cautionary tale, foresight isn’t optional—it’s your moat.

The irony?

The more ethically disciplined your AI startup is, the freer you’ll be to innovate boldly.

Because you’ll be doing it with the confidence that your foundation can withstand both market shifts and moral scrutiny.

FAQs

1. What’s the most common ethical mistake AI startups make?

Rushing to market without fully understanding or addressing bias and data privacy risks.

2. How can a small startup afford ethical safeguards?

Start small—use open-source bias detection tools, limit data scope, and partner with ethics researchers.

3. Are there real examples of AI ethics failures in startups?

Yes. Cases involving biased hiring tools, deepfake misuse, and privacy breaches have all surfaced publicly in recent years.

4. Does ethical AI slow down innovation?

Not if integrated early. In fact, it often prevents costly rework or PR disasters later.

5. Who should own “AI ethics” inside a startup?

It should be shared—technical leads, legal counsel, and founders must all be actively involved.

TL;DR – 6 Key Takeaways

- Ethics is survival: It’s not a nice-to-have; it’s a moat.

- Bias kills trust: Fix it at the data stage, or pay the price later.

- Privacy fuels the pipeline: Lose it and you lose your model’s future.

- IP clarity prevents ruin: Know what you train on and who owns outputs.

- Guard against misinformation: Build detection and prevention into your tools.

- Sustainability sells: Efficiency wins with both investors and the planet.

Conclusion

The ethics of generative AI in startups is not an abstract moral compass—it’s the steering wheel. Lose grip, and the road you’re speeding down can end in a cliff.

In AI, transformation without trust is disruption waiting to become destruction.

Ethical foresight from the first line of code isn’t about playing safe—it’s about staying smart. It gives resilience when laws shift, public opinion swings, and rivals fall to scandals you’ve avoided.

Your investors, users, and team bet on your judgment as much as your tech. Build boldly—on principles that weather storms.