Survive or Vanish: Multimodal AI Applications in Media

Multimodal AI applications in media are no longer a futuristic concept—they are here, reshaping the very foundation of how content is created, distributed, and consumed.

Technological change is accelerating, placing the media industry at a historic crossroads.

AI is no longer limited to simple tasks like tagging or transcription.

It can now watch, listen to, and analyze content much like a human, only faster, cheaper, and at scale.

The core idea is clear: multimodal AI is the next evolution in media. Companies that embrace it will gain agility and creativity, while those that resist risk irrelevance. This article explores what multimodal AI is, how it is changing the industry, and how to stay ahead.

Let’s break it down section by section.

What is Multimodal AI?

Think of multimodal AI as giving artificial intelligence multiple senses.

Instead of processing only one type of information (text, images, or sound), it can combine and interpret all of them together. This ability makes it far more powerful and flexible than traditional single-modality AI systems.

Here’s a breakdown of modalities:

- Text: AI understands and generates written language, from summaries to creative scripts.

- Audio: It processes speech, sound effects, and even complex musical compositions.

- Visual: It analyzes images, video, and live camera feeds to extract meaning.

Want a concrete example?

Imagine an AI system watching a movie.

It “sees” the characters on screen, “hears” their dialogue, and then generates a scene summary or a set of captions—all automatically.
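Under the hood, the key multimodal step is aligning what the system sees with what it hears. The sketch below assumes an upstream vision model and speech recognizer have already produced timestamped visual labels and dialogue lines, and that scene boundaries are known; `Event` and `scene_log` are hypothetical names. It only shows the fusion step that groups both modalities into a per-scene record that a summarization or captioning model could then consume.

```python
from dataclasses import dataclass


@dataclass
class Event:
    start: float  # seconds from the start of the video
    text: str     # a detected visual label or a line of dialogue


def scene_log(scene_bounds, visual_events, dialogue_events):
    """Group visual labels and dialogue into the scene whose [start, end) window contains them."""
    log = []
    for i, (start, end) in enumerate(scene_bounds, start=1):
        seen = sorted({e.text for e in visual_events if start <= e.start < end})
        heard = [e.text for e in dialogue_events if start <= e.start < end]
        log.append(f"Scene {i} ({start:.0f}-{end:.0f}s): shows {', '.join(seen) or 'nothing detected'}; "
                   f"dialogue: {' / '.join(heard) or 'none'}")
    return log


# Toy inputs standing in for real model outputs.
bounds = [(0, 30), (30, 60)]
visual = [Event(2, "beach"), Event(5, "guitar"), Event(35, "car")]
dialogue = [Event(4, "Play it again."), Event(40, "We're late.")]
log = scene_log(bounds, visual, dialogue)
for line in log:
    print(line)
```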

Or picture a system that takes a simple text prompt like “a boy playing guitar on a beach at sunset” and produces a short animated clip complete with background music.

Contrast this with older systems: a chatbot that only understood typed words, or a filter that could only enhance a photo.

Those were powerful but limited. Multimodal AI applications in media combine these abilities, enabling a leap forward in creativity and productivity.

Automating the Production Pipeline

Is the traditional content pipeline about to collapse?

Not exactly, but it is being reinvented.

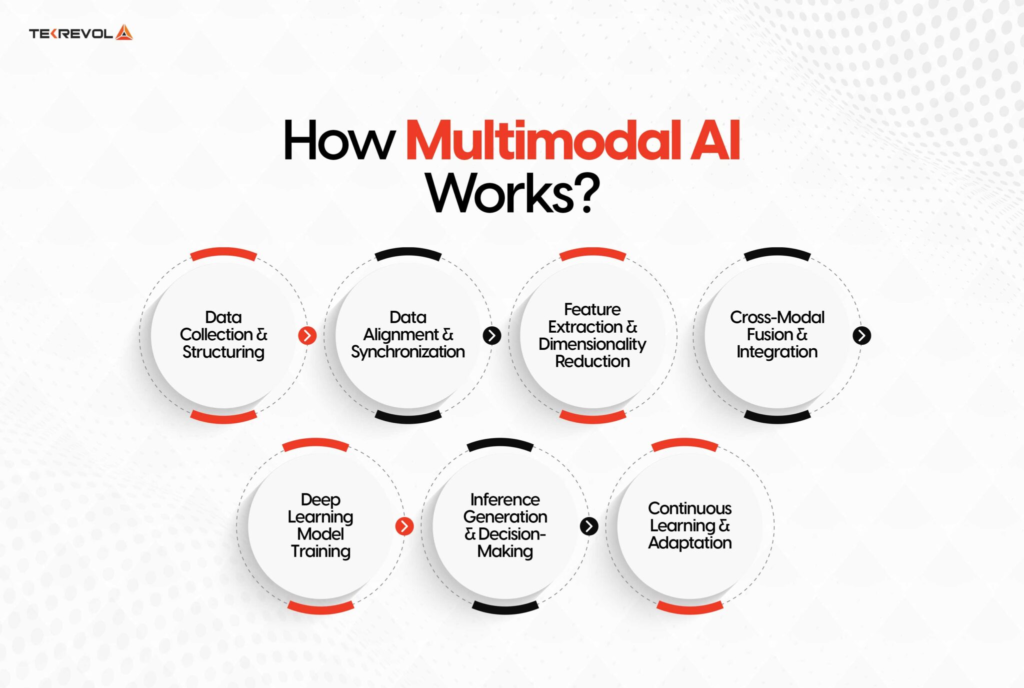

Source: Tekrevol.com

The heart of the media industry—turning ideas into finished products—has always been resource-intensive.

Hours of transcription, editing, logging footage, and creating drafts are required before the audience even sees a frame. Multimodal AI can now automate many of these steps.

For instance, long interviews can be transcribed and summarized automatically, cutting work hours drastically.
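To make the summarization half of that workflow concrete, here is a minimal sketch that assumes a speech-to-text step has already produced the transcript. It uses classic frequency-based extractive summarization, swapped in for illustration because it is easy to follow; production tools typically rely on large language models instead. The stopword list and function name are hypothetical.

```python
import re
from collections import Counter

# A tiny, illustrative stopword list; real systems use much larger ones.
STOPWORDS = {"the", "a", "an", "and", "or", "of", "to", "in", "is", "it", "we",
             "i", "that", "for", "on", "was", "this", "you", "be", "are"}


def summarize(transcript: str, max_sentences: int = 2) -> str:
    """Extractive summary: keep the sentences whose words are most frequent in the transcript."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", transcript) if s.strip()]
    freq = Counter(w for w in re.findall(r"[a-z']+", transcript.lower()) if w not in STOPWORDS)

    def score(sentence: str) -> float:
        tokens = [w for w in re.findall(r"[a-z']+", sentence.lower()) if w not in STOPWORDS]
        return sum(freq[w] for w in tokens) / (len(tokens) or 1)

    # Rank sentences by score, keep the best ones, then restore their original order.
    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    return " ".join(sentences[i] for i in sorted(ranked[:max_sentences]))


transcript = "Budget cuts hit the newsroom. Budget cuts forced layoffs. The weather was nice."
print(summarize(transcript))
```

Averaging the score over each sentence's tokens keeps long sentences from winning simply because they contain more words.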

AI can generate placeholder visuals for scripts, allowing teams to storyboard ideas in minutes instead of days.

Platforms like Runway already enable creators to convert text into short video clips, reducing costs and speeding up workflows.

But here’s the key: this doesn’t replace humans.

It frees them. Instead of spending weeks preparing raw material, producers and writers can focus on refining stories, polishing scripts, and innovating visually.

The grunt work moves to AI, while humans elevate the creative vision.

In other words: from script to screen, faster than ever.

Real-World Examples of Multimodal AI in Media

| Example | How It Works | Impact in Media |
|---|---|---|
| Movie Summarization | AI “watches” video, processes dialogue, and generates concise scene summaries or captions automatically. | Cuts manual logging time, improves accessibility, and enables faster search across archives. |
| Automated Storyboarding | From a text prompt, AI generates placeholder visuals or animations for scripts. | Speeds up pre-production by turning ideas into visual drafts within minutes. |
| Interview Processing | Long interviews are transcribed, summarized, and tagged by AI combining audio and text analysis. | Saves hours of manual transcription, enabling journalists to focus on story insights. |
| Creative Video Generation | Text prompts like “boy playing guitar on a beach” become animated clips with sound and visuals. | Expands creative possibilities, reduces costs, and allows rapid prototyping of new content. |
| Captioning & Accessibility | AI analyzes video (visual) and dialogue (audio) to create real-time captions and translations. | Improves accessibility for global audiences and meets compliance requirements. |
| Music & Sound Design | AI blends text prompts with audio generation to create music or soundscapes for scenes. | Provides affordable, customizable soundtracks, enhancing storytelling without high production costs. |

The Era of Dynamic Content

Static content is ending. Dynamic, AI-driven content is beginning.

Virtual hosts are already a reality: platforms such as Synthesia let organizations use AI avatars to deliver news or training sessions without a physical studio.

In entertainment, digital actors can perform scenes without human presence, reducing production costs while opening up new creative directions.

In advertising, the shift may be even more dramatic.

Imagine watching a car commercial where the model shown matches the one you recently searched for online.

Or a beverage ad that adapts visuals and voiceovers based on your region and preferred language—generated in real time.
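A fully generative system would create these variants on the fly, but the targeting logic can be illustrated with a simpler sketch that assumes a set of pre-produced variants and a known viewer region and language. `AdVariant`, its fields, and the `"*"` wildcard convention are hypothetical; the function returns the most specific variant that fits the viewer.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AdVariant:
    region: str     # e.g. "BR", or "*" for any region
    language: str   # e.g. "pt", or "*" for any language
    visuals: str
    voiceover: str


def pick_variant(variants, region, language):
    """Return the most specific variant matching the viewer's region and language, or None."""
    candidates = [v for v in variants
                  if v.region in (region, "*") and v.language in (language, "*")]
    # An exact region or language match counts as 1 point each; wildcards count as 0.
    return max(candidates, key=lambda v: (v.region == region) + (v.language == language),
               default=None)


variants = [
    AdVariant("*", "*", "generic", "English VO"),
    AdVariant("*", "pt", "generic", "Portuguese VO"),
    AdVariant("BR", "pt", "beach scene", "Brazilian Portuguese VO"),
]
print(pick_variant(variants, "BR", "pt").visuals)
```

Keeping a catch-all `("*", "*")` variant guarantees every viewer gets some ad even when no targeted version exists.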

That’s not science fiction; it’s personalization at scale.

This level of hyper-personalization lets brands reach you as an individual, not just as part of an audience segment. It’s exciting, but it also raises questions.

How much is too much when ads know more about you than you realize?

Revolutionizing Search and Discovery

How do you find what to watch in a sea of endless content? That’s another problem multimodal AI is solving.

Today, search is mostly keyword-based. Tomorrow, you’ll simply describe what you want across multiple modalities.

Source: Yatter.in

For example: “Find me a sci-fi movie with a strong female lead, neon visuals, and a soundtrack like Blade Runner.”

Multimodal AI can analyze not only text descriptions but also visual styles, audio tracks, and narrative themes to surface the closest matches.
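One common way to combine these signals is late fusion: compare the query with each title separately in every modality, then blend the similarity scores. The sketch below assumes the query and each title have already been encoded into per-modality embedding vectors by some model; the two-dimensional vectors, titles, and weights are made-up toy values, not real embeddings. Other systems instead map all modalities into one joint embedding space.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def rank(query, catalog, weights):
    """Late fusion: score each title per modality, combine with a weighted sum, best first."""
    scored = []
    for title, embeddings in catalog.items():
        score = sum(w * cosine(query[m], embeddings[m]) for m, w in weights.items())
        scored.append((score, title))
    return [title for _, title in sorted(scored, reverse=True)]


# Toy embeddings standing in for text, visual, and audio encoder outputs.
query = {"text": [1, 0], "visual": [0, 1], "audio": [1, 1]}
catalog = {
    "Neon Noir": {"text": [1, 0], "visual": [0, 1], "audio": [1, 1]},
    "Sunny Farm": {"text": [0, 1], "visual": [1, 0], "audio": [1, -1]},
}
weights = {"text": 0.5, "visual": 0.3, "audio": 0.2}
print(rank(query, catalog, weights))
```

The weights let a service decide how much, say, visual style should count relative to the plot description.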

Voice-driven search will also expand. Imagine asking your smart speaker or VR headset for a movie where the music feels uplifting and the villain is sympathetic.

With multimodal AI, this becomes possible.

Streaming platforms such as Netflix and Disney+ already invest in AI-driven recommendations and discovery to make browsing more intuitive and engaging. For you as a viewer, that means less scrolling and more enjoying.

Immersive and Interactive Storytelling

Is watching a movie enough anymore? Increasingly, audiences want to participate.

Multimodal AI is driving interactive narratives where users influence the story’s direction.

For example, you might watch a mystery drama where your spoken questions shape how characters respond.

Or step into a VR world where your gestures and voice guide the plot.
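At its simplest, an interactive narrative is a graph of scenes in which viewer input chooses the next edge. The sketch below assumes the viewer's spoken question has already been transcribed, and it uses plain keyword matching as a deliberately simple stand-in for the intent detection a language model would perform. The `STORY` graph and its scene names are hypothetical.

```python
import re

# Hypothetical branching story: each scene maps trigger keywords to the next scene.
STORY = {
    "study": {
        "text": "The detective waits by the fireplace.",
        "choices": {"butler": "pantry", "letter": "desk"},
    },
    "pantry": {"text": "The butler avoids your eyes.", "choices": {}},
    "desk": {"text": "The letter is signed with a single initial.", "choices": {}},
}


def next_scene(current, utterance):
    """Move to the scene whose keyword appears in the viewer's transcribed question."""
    words = set(re.findall(r"[a-z]+", utterance.lower()))
    for keyword, target in STORY[current]["choices"].items():
        if keyword in words:
            return target
    return current  # no recognized choice: stay in the current scene


scene = next_scene("study", "What does the butler know?")
print(STORY[scene]["text"])
```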

Games and interactive films are already pushing boundaries, but AI makes it possible to scale this immersion across all forms of media.

Imagine an open-world video game where non-player characters (NPCs) respond intelligently to your tone of voice, or where landscapes adapt dynamically to your emotional reactions.

The result?

Living, breathing worlds that are as much yours as the creator’s. These aren’t just stories—they’re experiences. And that’s a huge leap beyond passive consumption.

The Accessibility Revolution

One of the most inspiring aspects of multimodal AI applications in media is accessibility.

Content that was once difficult or impossible to consume for certain audiences is now within reach.

AI can generate real-time captions for live broadcasts, making them more inclusive for the hearing-impaired.
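Whatever model produces the words, captions ultimately have to be delivered in a timed format that players understand. The sketch below assumes a speech recognizer has already returned `(start, end, text)` segments in seconds and writes them as SubRip (SRT), a widely supported caption file format. Live broadcasts often use other caption formats, so treat this as an illustration of the timing step rather than a broadcast pipeline; the function names are hypothetical.

```python
def to_timestamp(seconds: float) -> str:
    """Format seconds as an SRT timestamp, HH:MM:SS,mmm."""
    ms = round(seconds * 1000)
    h, ms = divmod(ms, 3_600_000)
    m, ms = divmod(ms, 60_000)
    s, ms = divmod(ms, 1000)
    return f"{h:02}:{m:02}:{s:02},{ms:03}"


def to_srt(segments):
    """Turn (start, end, text) speech segments into numbered SRT caption blocks."""
    blocks = []
    for i, (start, end, text) in enumerate(segments, start=1):
        blocks.append(f"{i}\n{to_timestamp(start)} --> {to_timestamp(end)}\n{text}\n")
    return "\n".join(blocks)


segments = [(0.0, 2.5, "Welcome back."), (2.5, 5.0, "Here are tonight's headlines.")]
print(to_srt(segments))
```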

It can also describe visual changes in real time for the visually impaired, narrating key moments in sports, movies, or even theater.

Translation is another frontier.

AI tools can quickly translate dialogue and subtitles into multiple languages, making global distribution far easier. For example, YouTube already uses AI to generate automatic captions and translate them, allowing creators to reach international audiences with minimal effort.

The dream of a truly borderless media experience is now closer than ever.

And with accessibility comes new audiences, new voices, and new opportunities.

Ethical and Social Crossroads

With great power comes great risk.

Multimodal AI applications in media can generate astonishing results, but they can also create chaos. Deepfakes are already being used to spread misinformation, and AI-generated audio and video are becoming increasingly difficult to distinguish from reality.

That’s a serious risk for news organizations, politics, and social trust.

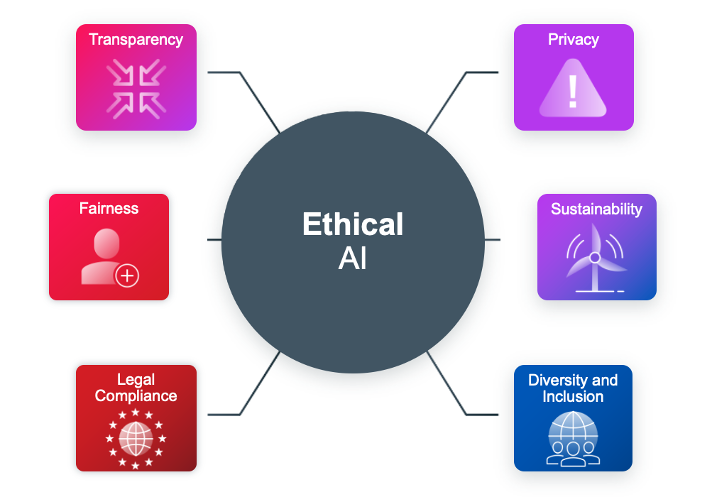

Source: Veritas

AI bias is another problem.

If AI is trained on biased data, its outputs—recommendations, character portrayals, or even news coverage—may reinforce stereotypes or marginalize communities.
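Detecting this kind of skew starts with measuring it. The sketch below is one simple audit, assuming each catalog item carries a group label (for example, the demographic of a lead character) and that the catalog's own group shares serve as the baseline. The labels, tolerance, and function names are hypothetical, and a check like this flags only one narrow kind of imbalance; it does not fix anything on its own.

```python
from collections import Counter


def exposure_share(recommended, group_of):
    """Fraction of recommended items attributed to each group."""
    counts = Counter(group_of[item] for item in recommended)
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()} if total else {}


def flag_skew(shares, catalog_shares, tolerance=0.1):
    """Groups whose share of recommendations falls short of their catalog share by more than `tolerance`."""
    return sorted(g for g, c in catalog_shares.items() if shares.get(g, 0.0) < c - tolerance)


recommended = ["a", "b", "c", "d"]
group_of = {"a": "X", "b": "X", "c": "X", "d": "Y"}
shares = exposure_share(recommended, group_of)
print(shares, flag_skew(shares, {"X": 0.5, "Y": 0.5}))
```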

Then comes the workforce question.

What happens to editors, transcribers, and even actors when AI takes on their roles?

Job displacement is a genuine concern, and industries must plan for retraining and new skill development.

Finally, copyright remains murky.

If AI generates a movie trailer, who owns it—the creator, the AI platform, or both? Until regulations catch up, this gray area will persist.

As Brookings highlights, the challenge is balancing innovation with accountability.

Without ethical frameworks, the risks could outweigh the rewards.

The Path Forward and The Human Element

So, what’s next? The choice is stark: adapt or disappear.

The future of media is not about asking if AI will transform the industry, but how.

Survival requires embracing multimodal AI applications in media while ensuring that human creativity remains at the core.

Here are some survival strategies:

- Invest in AI tools that integrate seamlessly with existing workflows.

- Train teams to work alongside AI rather than against it.

- Build ethical guidelines to ensure transparency, fairness, and accountability.

- Focus on uniquely human skills—empathy, vision, and storytelling—that AI cannot replicate.

The reality is simple: AI can amplify your reach, but only human imagination gives it direction.

This is the great paradox of our time. We face a future where media is more powerful, personalized, and immersive than ever—but also more fraught with risk. Those who find the balance will lead. Those who don’t may vanish.

FAQs

What are multimodal AI applications in media?

They are AI systems that can process and generate multiple types of data—text, audio, and visuals—simultaneously, allowing more advanced media production and personalization.

How is multimodal AI changing video production?

It automates tasks like transcription, storyboarding, and visual generation, drastically reducing production time and costs while allowing creators to focus on higher-level storytelling.

Are deepfakes a real threat in media?

Yes. Deepfakes can spread misinformation quickly and erode trust. That’s why ethical use, regulation, and detection tools are critical alongside AI adoption.

Will AI replace human creativity?

Not entirely. AI can handle repetitive or technical tasks, but true creativity, empathy, and cultural nuance remain uniquely human strengths.

How does multimodal AI improve accessibility?

It generates real-time captions, provides audio descriptions for the visually impaired, and offers instant translations, making content more inclusive and globally available.


Conclusion

Multimodal AI applications in media are reshaping the industry with breathtaking speed. From automating production pipelines to enabling hyper-personalized content, immersive storytelling, and global accessibility, the transformation is already underway.

But alongside opportunity comes risk: misinformation, bias, and job displacement loom large.

The path forward is not about rejecting AI but mastering it. Media companies that invest in the technology while preserving the human touch will thrive. Those that cling to old models may not survive.

The future is clear: embrace the fusion of human creativity with machine intelligence—or vanish in the noise.