What AI Can Do for You, and Where It Fails

What artificial intelligence can do for you goes far beyond simple automation, but only if you understand its real capabilities and limits.

Have you ever wondered why some AI tools feel magical while others disappoint?

The difference lies in knowing where AI excels and where human judgment remains essential.

In this article, you will explore the practical uses and common pitfalls of AI.

You’ll also find strategies for leveraging AI without losing control. From narrow repetitive tasks to maintaining oversight, each section provides actionable insights.

The aim is to help you use AI deliberately, efficiently, and with confidence.

Why Most People Misunderstand AI

Most people see AI as human-like because it speaks confidently and writes fluently. Headlines and demos exaggerate its abilities, shaping false expectations. In reality, AI predicts patterns; it does not think or understand like humans.

If you feel confused about AI, you are not alone.

Most people approach AI with assumptions shaped by headlines, demos, and secondhand opinions rather than lived experience.

As a result, expectations often drift far from reality.

AI appears powerful, confident, and articulate, so it is easy to assume it understands the world the way you do.

It does not.

That misunderstanding sits at the root of most frustration, disappointment, and misuse you see today. There are certain human competencies that AI simply cannot replace.

So where does the confusion begin? Usually with how AI is framed, explained, and casually compared to human intelligence.

AI Is Not Human Intelligence

AI does not think, reason, or understand in the human sense. It identifies patterns in massive amounts of data and predicts what comes next.

That is all.

When AI writes a paragraph or answers a question, it is not drawing from awareness or intent. It is calculating probability.

This distinction matters more than it sounds. When you assume intelligence, you expect judgment. When you expect judgment, you trust outputs too quickly. That is where problems begin.

AI feels smart, but feeling smart is not the same as being wise.

Researchers regularly stress this point.

Large language models do not know facts; they approximate them. Once you internalize that, your expectations shift naturally. You stop asking AI to think and start asking it to assist.
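To make "predicts what comes next" concrete, here is a deliberately tiny sketch in Python. It is not how any real product is built: real language models use neural networks trained on vast amounts of text, while this toy only counts which word follows which in a made-up sentence. The function names and the sample text are illustrative assumptions.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count which word follows which in the training text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def next_word_probabilities(following, word):
    """Turn raw follow-up counts into probabilities for one word."""
    counts = following.get(word.lower(), Counter())
    total = sum(counts.values())
    if total == 0:
        return {}  # the toy model has never seen this word
    return {w: c / total for w, c in counts.items()}


# Hypothetical training text, chosen only for illustration.
corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigrams(corpus)
probs = next_word_probabilities(model, "the")
print(probs)
```

The toy picks "cat" after "the" only because that pairing was most frequent in its training text, not because it knows anything about cats. That is the sense in which output is a calculation rather than an understanding.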

How Marketing and Media Create False Expectations

The second source of confusion is visibility bias.


You mostly see AI at its best. Polished demos. Perfect outputs. Carefully curated use cases.

What you rarely see are the failed prompts, the hallucinations, or the constant human correction behind the scenes.

According to multiple industry reports, a large share of enterprise AI outputs still require human review before use.

That statistic rarely makes headlines. What gets attention is what looks magical, not what works consistently.

Over time, this creates a distorted picture of what AI can realistically deliver on its own.

Why Vague Prompts Lead to Poor Results

Finally, many misunderstandings come from how people interact with AI.

Vague instructions produce vague outcomes. Yet users often blame the tool rather than the input.

AI performs best when tasks are narrow, goals are clear, and constraints are defined. When you ask what artificial intelligence can do for you in general terms, you invite generic answers.

Specificity changes everything. Clear prompts. Clear scope. Clear expectations.

That is not a limitation. It is a boundary. And boundaries are what make AI genuinely useful.

Understanding limits is the first step toward using AI well.

What AI Can Do for You

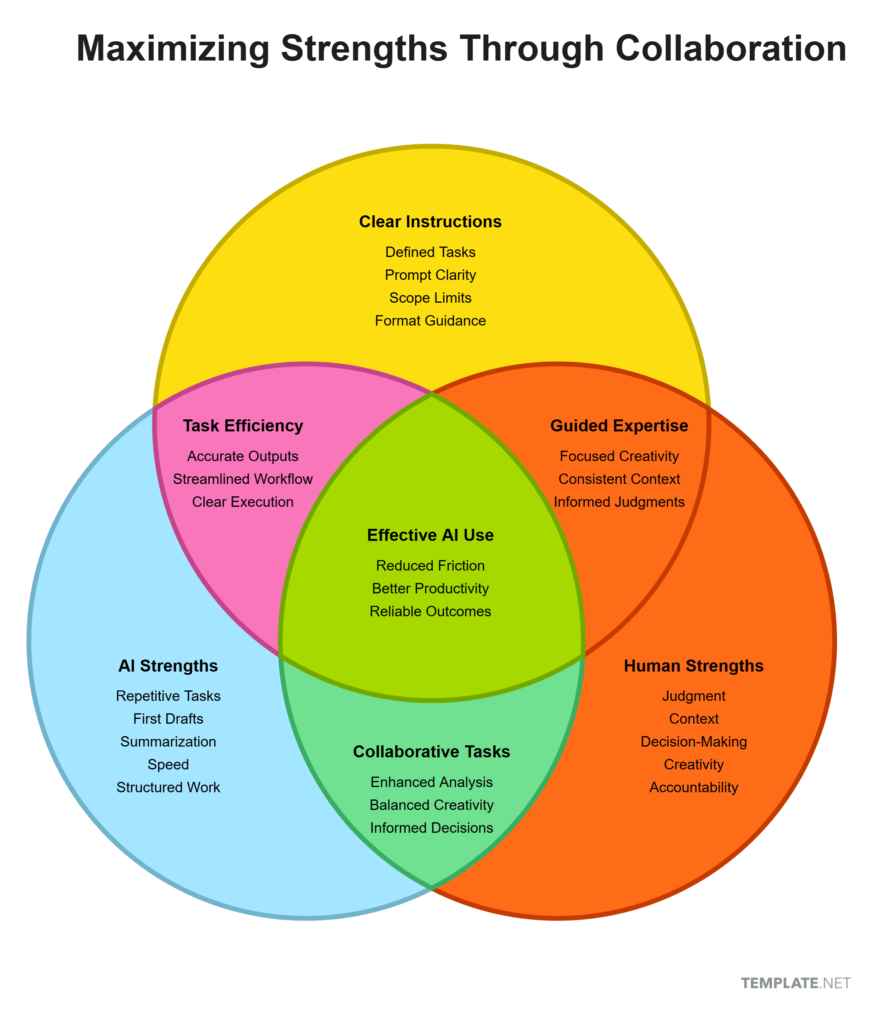

AI works best on repetitive, structured tasks that drain time and energy. It speeds up first drafts and summarizes information efficiently. Clear instructions ensure useful outputs while you remain in control of decisions.

Once you strip away the hype, AI becomes far more practical and far less mysterious.

You stop asking it to replace judgment and start using it to reduce friction.

That shift alone changes outcomes. Instead of feeling overwhelmed, you begin to see clear, repeatable value in specific situations.

So what does that look like in practice?

Let us break it down.

Handling Narrow and Repetitive Tasks Efficiently

AI performs best when the task is narrow, structured, and repetitive.

Think of activities that drain time but do not require deep thinking.

Formatting documents. Summarizing long text. Categorizing information. Creating variations of similar content.

In these cases, AI behaves like a tireless assistant. It does not get bored. It does not slow down.

According to productivity studies cited by firms like McKinsey, “about 60 percent of jobs have at least 30 percent of their activities that can technically be automated with currently demonstrated technologies.”

That is not theoretical. That is observable.

The key is not complexity, but clarity.

When the task is well defined, AI delivers consistent results that free you to focus elsewhere. Less grind. More attention where it matters.

Speeding Up First Drafts and Basic Research

AI is especially useful at the beginning of the thinking process.

Not the end.

It can help you generate a first draft, outline an article, or summarize existing information quickly. This matters because starting is often the hardest part.

Instead of staring at a blank page, you work with raw material. You edit. You refine. You decide.

AI handles the momentum, while you retain control.

Research works the same way. AI can scan, condense, and explain surface-level information across domains such as artificial intelligence, digital marketing, or workplace productivity.

It gives you orientation, not authority. That distinction protects you from overreliance.

Working Best With Clear Instructions and Constraints

Here is the rule many people miss. AI output quality is directly tied to input quality. Vague prompts produce vague answers.

Clear instructions create usable results.

When you define role, context, format, and limits, AI responds predictably.

This is where understanding what artificial intelligence can do for you becomes operational rather than abstract.

Specific tasks. Specific outcomes. Clear boundaries.

In AI terms, this is prompt engineering. In human terms, it is simply good communication.
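As a rough illustration of defining role, context, format, and limits, the sketch below assembles those pieces into one structured prompt and refuses to build it if any piece is blank. The function name `build_prompt`, its field labels, and the sample values are assumptions made for this example, not part of any particular AI tool.

```python
def build_prompt(role, context, task, output_format, limits):
    """Assemble a structured prompt; refuse to build one with blank fields."""
    fields = {
        "Role": role,
        "Context": context,
        "Task": task,
        "Format": output_format,
    }
    missing = [name for name, value in fields.items() if not value.strip()]
    if not limits:
        missing.append("Limits")
    if missing:
        raise ValueError("Define these before prompting: " + ", ".join(missing))

    lines = [f"{name}: {value.strip()}" for name, value in fields.items()]
    lines.append("Limits:")
    lines.extend(f"- {limit}" for limit in limits)
    return "\n".join(lines)


# Hypothetical example values.
prompt = build_prompt(
    role="You are an editor for a small-business newsletter.",
    context="Readers are shop owners with no technical background.",
    task="Summarize the attached meeting notes.",
    output_format="Five bullet points, one sentence each.",
    limits=["Use only facts from the notes.", "Flag anything you are unsure about."],
)
print(prompt)
```

The resulting text can be pasted into whichever tool you use. The point of the check is simply that a missing field surfaces before you ask, not after you get a vague answer.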

Ask better questions. Get better help. Simple, but powerful.

AI rewards precision, not imagination.

Remember that AI is ultimately your assistant, not your master. If you treat AI as a tool rather than a thinker, it becomes reliable, scalable, and genuinely helpful.

Where AI Fails Consistently

AI looks confident. That confidence often misleads. When systems speak fluently and quickly, it is natural to assume competence across situations.

But this is exactly where many users get caught out.

AI performs well in constrained environments, yet struggles the moment tasks require judgment, nuance, or lived understanding.

Why does this matter?

Because knowing what artificial intelligence can do for you is incomplete without understanding where it predictably breaks down.

Lack of True Understanding and Judgment

AI does not understand meaning. It recognizes patterns.

That difference is subtle but critical.

When you ask a question involving ethics, priorities, or trade-offs, AI cannot weigh consequences the way a human can.

In fields like healthcare, law, or finance, this limitation becomes obvious. Industry studies repeatedly show that AI systems require human oversight precisely because they lack situational judgment.

They do not know what matters, only what resembles past data.

You may get an answer that sounds reasonable. It might even be well structured. Yet it lacks intent, values, and accountability. Those remain human responsibilities.

Confident Errors and Hallucinated Information

One of the most discussed AI weaknesses is hallucination.

This happens when AI generates information that sounds plausible but is factually incorrect. The danger lies in presentation. AI rarely signals uncertainty clearly.

Research from organizations like Stanford and OpenAI confirms that large language models can fabricate sources, statistics, and explanations under ambiguous conditions.

Fluency masks uncertainty. That is why blind trust is risky.

If you do not verify outputs, small errors can propagate quickly, especially in professional or public-facing work.

Inability to Handle Ambiguity and Context

Ambiguity is where human experience shines and AI struggles. Sarcasm. Cultural nuance. Emotional subtext. Unspoken assumptions.

These elements shape real communication, yet they remain difficult for machines.

When context shifts, AI often misses it. It cannot read the room. It cannot infer unstated intent reliably.

As a result, responses may feel off, inappropriate, or incomplete.

This is why AI works best with structure and fails with fuzziness. Clear inputs reduce risk. Vague situations amplify error. Knowing this boundary protects you from overconfidence and disappointment.

AI is powerful, but it is not perceptive.

The Cost of Over-trusting AI

Over-trusting AI lets small errors multiply quickly and creates hidden risks in decisions and communication. Reliance reduces critical thinking and weakens your skills over time. Polished outputs can mislead, so always review before acting.

AI makes work feel easier. Faster. Cleaner.

That convenience is precisely why overtrust creeps in quietly.

When tools respond instantly and confidently, you begin to lower your guard. You skim instead of review. You accept instead of question.

Over time, that habit creates risks that are not obvious until something goes wrong.

So what is the real cost when trust replaces judgment?

How Small Errors Scale Into Big Problems

AI errors rarely announce themselves. They start small. A misinterpreted fact. A flawed assumption. A missing nuance. On their own, these seem harmless.

Yet once AI-generated content is reused, shared, or automated, those mistakes multiply.

In organizational settings, studies on automation bias show that people are significantly more likely to accept incorrect machine suggestions than human ones.

Confidence accelerates error propagation.

When AI output feeds reports, decisions, or customer communication, the impact compounds quickly.

This is why human review is not optional. It is a safeguard. Small checks prevent large downstream failures.
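A back-of-the-envelope calculation shows how quickly small error rates add up. The sketch below assumes, purely for illustration, that each automated step is right 98 percent of the time and that errors at different steps are independent. Both the figure and the independence assumption are hypothetical, not measurements.

```python
def chance_all_steps_correct(per_step_accuracy, steps):
    """Probability that every step in a chain is correct,
    assuming errors at each step are independent."""
    return per_step_accuracy ** steps


# 0.98 is an assumed accuracy, used only to show the trend.
for steps in (1, 5, 10, 20):
    chance = chance_all_steps_correct(0.98, steps)
    print(f"{steps:>2} chained steps: {chance:.1%} chance of no error")
```

Under these assumptions, the chance of an error-free result drops with every unreviewed step that feeds the next one, which is why a check early in the chain is worth more than a cleanup at the end.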

Skill Atrophy and Loss of Independent Thinking

Another hidden cost is cognitive erosion.

When AI handles tasks you once performed, your skills slowly weaken. Writing becomes harder. Research becomes shallower.

Problem-solving shortcuts replace reasoning.

This is not hypothetical. Behavioral research consistently shows that reliance on automated systems reduces critical thinking engagement over time.

Convenience trades quietly against competence.

If you stop practicing core skills, you lose confidence in your own judgment. Ironically, that makes you depend even more on AI, creating a feedback loop that is difficult to reverse.

False Confidence From Polished Outputs

AI outputs look professional. Well structured. Grammatically clean. That polish creates a false sense of reliability. You feel reassured before you have verified anything.

But polish is not accuracy. Clarity is not correctness. AI excels at presentation, not truth validation. When you forget that distinction, you confuse surface quality with substance.

Understanding what artificial intelligence can do for you includes recognizing this illusion. The better AI sounds, the more carefully you should review its work.

Trust should rise with verification, not fluency.

AI should support your thinking, not replace it.

Where AI Fits Best in Real Work and Life

You hear sweeping claims about AI replacing jobs or running entire businesses.

Reality is calmer and more useful. In day-to-day work and life, AI earns its place when it supports you, not when it replaces your judgment.

Think of it as a practical layer of assistance that reduces friction, speeds up routine tasks, and helps you focus on what actually requires thinking.

So where does that line sit?

AI as an Assistant Rather Than a Decision Maker

Should AI decide for you? Not really.

AI works best as a decision support system, not a final authority.

Modern generative AI tools and large language models are excellent at summarizing options, spotting patterns, and presenting alternatives you might miss.

They are not good at understanding context the way you do, especially emotional, ethical, or situational nuance.

For example, AI can outline pros and cons of a business move or draft a policy memo in minutes. But deciding whether that move aligns with your values, timing, or risk tolerance is still your job.

That final call belongs to human judgment, not an algorithm trained on averages.

Reliable Use Cases Where AI Adds Real Value

Where does AI shine consistently?

Start with information-heavy tasks.

Research synthesis, meeting summaries, document drafting, spreadsheet analysis, and customer support triage are proven wins.

A McKinsey report notes that generative AI could automate or accelerate up to 60 percent of knowledge work at the task level, not the job level.

That distinction matters.

You benefit most when AI handles preparation, not outcomes.

It drafts, you refine. It analyzes, you interpret. It suggests, you decide. This is what artificial intelligence can do for you when used responsibly and repeatedly.

Content creation is another strong area, provided you stay involved.

AI helps you get unstuck, explore angles, and maintain consistency. Speed improves, quality stays human.

Areas Where Human Oversight Is Non-Negotiable

What should never be hands off?

Anything involving accountability, safety, or long-term consequences demands your oversight.

Hiring decisions. Medical guidance. Financial approvals. Legal interpretations.

AI can assist, but trusting outputs blindly is risky. Models can hallucinate, reflect bias, or miss recent changes.

In real work, errors scale fast. You are the safeguard.

Review assumptions. Verify facts. Apply judgment. This balance is essential if you want AI to remain an asset rather than a liability.

Used this way, AI becomes a quiet partner. Helpful. Fast. Limited.

And that is exactly where it belongs.

How to Use AI Without Losing Control

Stay in control by defining tasks clearly before using AI tools and setting specific outcomes. Always review and edit AI outputs to ensure accuracy and accountability. Treat results as drafts, so judgment and creativity remain fully with you.

AI is easiest to use when you are clear about who is in charge.

That may sound obvious, yet many problems begin when people treat AI like a thinking partner instead of a task executor. Control does not come from using fewer tools.

It comes from using them deliberately.

So how do you stay in control while still getting value?

Defining the Task Before Using the Tool

Before opening any AI tool, pause and define the job.

What outcome do you want? What format should the result follow? What constraints matter most?

This step sounds simple, yet it is often skipped.

When tasks are clearly framed, AI becomes predictable. When tasks are vague, AI becomes erratic.

That is not a flaw. It is a design reality of large language models.

Clarity upfront reduces correction later. Research in human-computer interaction consistently shows that well-specified inputs improve output reliability.

In practical terms, this means stating audience, tone, scope, and limits before asking anything. Think first. Then prompt.

Maintaining a Human-in-the-Loop Process

Control is reinforced through review.

Keeping a human in the loop means you actively evaluate, edit, and validate AI output before it moves forward.

This practice is standard in high-risk domains such as finance, healthcare, and enterprise decision systems.

Why? Because AI cannot take responsibility. You can.

Verification is not mistrust. It is professionalism. Oversight turns automation into augmentation.

Even when AI output looks polished, pause.

Ask whether the logic holds. Check sources. Adjust tone. This step protects quality and preserves accountability.
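If AI output flows through any kind of script or workflow, the review step can be made explicit rather than left to habit. The sketch below is one possible shape for that, assuming a simple in-house process. The `Draft` class and the `review` and `publish` functions are hypothetical names invented for this example.

```python
from dataclasses import dataclass, field


@dataclass
class Draft:
    """AI-generated text that has not yet been approved by a person."""
    text: str
    approved: bool = False
    reviewer_notes: list = field(default_factory=list)


def review(draft, reviewer, logic_holds, sources_checked, tone_ok, note=""):
    """Record a named person's checks; approve only if every check passed."""
    draft.approved = logic_holds and sources_checked and tone_ok
    status = "approved" if draft.approved else "sent back"
    draft.reviewer_notes.append(f"{reviewer}: {status}. {note}".strip())
    return draft


def publish(draft):
    """Release the text only after a human has approved it."""
    if not draft.approved:
        raise PermissionError("Draft has not passed human review.")
    return draft.text


# Hypothetical usage: the draft cannot move forward until someone signs off.
draft = Draft(text="Quarterly summary generated by an AI tool.")
review(draft, reviewer="Sam", logic_holds=True, sources_checked=True, tone_ok=True)
final_text = publish(draft)
```

The design choice worth noting is that approval is tied to a named reviewer, which keeps accountability with a person rather than with the tool.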

Treating AI Output as Raw Material

The most effective users treat AI output as a draft, not a decision.

Raw material invites shaping. Finished products invite complacency.

When you approach AI this way, creativity stays with you. Judgment stays with you.

AI simply accelerates momentum.

This mindset also prevents dependency. You remain the editor, strategist, and final authority.

Understanding what artificial intelligence can do for you becomes clearer once you stop expecting answers and start using inputs. The tool serves the process. You own the outcome.

Control is not about limitation. It is about intention.

The Right Mental Model for Using AI

Treat AI as leverage, not wisdom, to accelerate tasks without replacing thinking. Clear constraints improve output reliability and reduce errors. Always pair AI with human judgment to retain control and make informed decisions.

If you want consistent results from AI, the tool matters less than the mindset.

Many people approach AI as if it were a source of answers.

That is the wrong frame. AI works best when you treat it as leverage, a way to extend your effort and speed, not replace your thinking.

So what should you expect from it? And just as important, what should you not?

AI as Leverage Not Wisdom

Is AI intelligent in the human sense?

Not really.

Large language models are pattern engines.

They generate responses based on probabilities, not understanding.

This is why they can sound confident and still be wrong. When you recognize this, your usage becomes sharper.

You stop asking AI what to believe and start asking it how to explore.

Used correctly, AI helps you draft, compare, simulate, and organize.

It accelerates work that would otherwise drain time and attention. Wisdom still comes from experience, context, and consequence. AI simply shortens the distance between question and useful output.

According to research from Stanford’s Human-Centered AI Institute, people who treat AI as a collaborator rather than an authority make better decisions over time.

The mental model shapes the outcome.

Why Constraints Make AI More Powerful

Why do vague prompts fail so often?

Because AI responds to structure.

Clear constraints give AI direction. When you define the audience, tone, format, and goal, output quality improves dramatically.

This is not about prompt tricks. It is about thinking clearly before you ask.

Constraints also protect you.

They reduce hallucinations, limit scope creep, and keep results aligned with reality. The tighter your frame, the more reliable the response. This is a core principle in applied AI workflows and enterprise AI systems.

In practical terms, constraints turn AI from a novelty into a dependable assistant.

Pairing AI With Human Judgment for Better Outcomes

Where does the final responsibility sit? With you.

AI can surface options, highlight risks, and test assumptions. But judgment involves trade-offs, ethics, timing, and accountability. These remain human responsibilities.

When you review, question, and refine AI output, quality improves and errors shrink.

This pairing is the real advantage. AI handles scale and speed.

You handle meaning and direction.

That balance defines what artificial intelligence can do for you in real work, without eroding your skills or confidence.

Used this way, AI becomes quieter. More useful. And far more trustworthy.

FAQs

1. How does AI assist in daily tasks without replacing human judgment?

AI excels at repetitive, structured, and time-consuming tasks, allowing you to save time and focus on decision-making. It can draft documents, summarize research, or categorize information. However, AI cannot replace context-based judgment or ethical reasoning, which remain firmly human responsibilities. Using AI as a support tool ensures efficiency without losing control over outcomes.

2. Why do AI outputs sometimes provide incorrect or misleading information?

AI generates responses based on patterns in large datasets, not understanding. This can lead to confident errors or hallucinated information, especially in ambiguous contexts. Users must verify facts and cross-check outputs. Awareness of these limitations helps you balance productivity gains with accuracy, knowing where AI fails to provide reliable answers.

3. In which scenarios is AI most effective for business or personal use?

AI works best with narrow, clearly defined tasks such as automating reports, summarizing content, generating initial drafts, or managing routine data entry. When goals, format, and constraints are explicit, AI delivers consistent results. Tasks requiring nuance, ethical reasoning, or strategic thinking remain areas where human judgment is essential.

4. How can I prevent overreliance on AI for critical decisions?

Maintaining a human in the loop is crucial. Review AI outputs carefully before use, treat results as raw material, and make final decisions yourself. Overtrust can cause skill atrophy, propagate errors, or create false confidence. Human oversight ensures AI remains a productivity enhancer rather than a decision maker.

5. What are the risks of vague prompts or unclear instructions in AI use?

Vague prompts produce vague outputs. AI needs well-defined tasks, boundaries, and context to generate useful results. Ambiguous instructions can lead to irrelevant, inaccurate, or misleading information. Clear prompts improve efficiency, reduce errors, and maximize what artificial intelligence can do for you in real-world applications.

6. How can I integrate AI into workflows without losing control over quality?

Define tasks clearly, set constraints, and actively monitor outputs. Treat AI as an assistant that accelerates work rather than a substitute for reasoning. Using structured processes ensures outputs align with goals while minimizing errors, supporting both productivity and accuracy in your daily work.

7. What mental model should I adopt to use AI effectively?

Think of AI as leverage, not wisdom. Recognize its strengths in narrow, repetitive, and structured tasks while acknowledging limits in judgment, context understanding, and ambiguity. Pairing AI with human expertise allows you to benefit from speed and efficiency without compromising quality, ethics, or accountability.

Conclusion

What artificial intelligence can do for you is far more practical than most headlines suggest, but only if you understand its boundaries. You cannot expect AI to think, judge, or replace human insight.

It excels in narrow, well-defined tasks where precision and repetition matter.

Recognizing where AI fails, where overtrust can harm, and where human oversight is essential gives you a real advantage. Small errors can multiply, and skills can atrophy if you rely blindly, but intentional use preserves control and boosts productivity.

Ask yourself: are you using AI as a tool or letting it dictate outcomes?

The difference defines results. With clear task definition and disciplined review, AI becomes an amplifier, not a crutch.

Mastering AI means embracing its strengths, respecting its limits, and keeping human judgment central. Use it deliberately, and the impact is transformative.