AI Ethics Glossary 101: The Hidden Risks Lurking Inside Your AI

An AI ethics glossary exists because AI is already making decisions that affect everyday life, even when people do not fully understand it.

Today AI systems approve loans, filter information, and influence outcomes, while the language behind them feels complex and distant.

That gap creates risk.

When AI terms are unclear, responsibility weakens, trust fades, and bias can slip through unnoticed.

This glossary offers a way forward by making important ideas easier to grasp without losing their depth.

Clear. Practical. Human.

You will learn how ethical and legal terms show up in real situations.

Societal effects are explained just as simply: why they matter right now, and how to engage with AI with awareness rather than fear.

This understanding changes perspective, and the resulting clarity brings confidence.

Core Ethical Principles (AI ethics glossary)

| No. | Term | Simple Meaning | Why It Matters in Real Life |

|---|---|---|---|

| 1 | Accountability | Clearly knowing who is responsible when an AI system makes a decision or mistake. | If no one is responsible, problems get ignored and trust is lost. |

| 2 | Autonomy | Making sure people still have control and choice when using AI. | Too much AI control can take away human judgment without anyone noticing. |

| 3 | Beneficence | Using AI to do good and help people and society. | AI should improve lives, not just save time or money. |

| 4 | Non-maleficence | Making sure AI does not cause harm, even by accident. | Harm can happen slowly and may be noticed only after damage is done. |

| 5 | Justice | Treating all people fairly when AI is used. | If AI helps only some groups, unfairness grows. |

| 6 | Fairness | Ensuring AI does not favor or hurt people based on bias. | Unfair AI decisions quickly destroy trust and cause conflict. |

SOURCE: AIPOTS

Data and Privacy Terms

| No. | Term | Simple Meaning | Why It Matters in Real Life |

|---|---|---|---|

| 7 | Data Minimization | Collecting only the information that is truly needed, nothing extra. | When too much data is collected, the risk of misuse, leaks, or spying increases. |

| 8 | Data Ownership | Knowing who the data really belongs to, the user or the company. | If ownership is unclear, people lose control over their own information. |

| 9 | Data Privacy | Rules that protect how personal information is collected, stored, and shared. | Without privacy, personal details can be misused or exposed without warning. |

| 10 | Data Sovereignty | Laws that decide where data must be stored and processed. | Data stored in the wrong country may not be protected properly. |

| 11 | Informed Consent | Users clearly agreeing to how their data will be used. | Hidden or confusing consent removes real choice from users. |

How to understand these terms:

These ideas explain how personal information should be handled when AI systems are used. They act like common-sense rules for respect and safety. When data is treated carelessly, people often suffer the consequences, not companies.

Why this matters today:

AI systems collect huge amounts of data every day. Most people do not know what is taken, where it goes, or how long it is kept. Problems usually appear later, after data is shared, sold, leaked, or used in ways users never expected.

Simple takeaway:

Good data practices are about respect. Collect less. Be clear. Ask permission honestly. Follow local rules. When people feel safe, they trust the system. When they feel watched or tricked, trust disappears and is very hard to rebuild.

Algorithmic Concepts

| No. | Term | Simple Meaning | Why It Matters in Real Life |

|---|---|---|---|

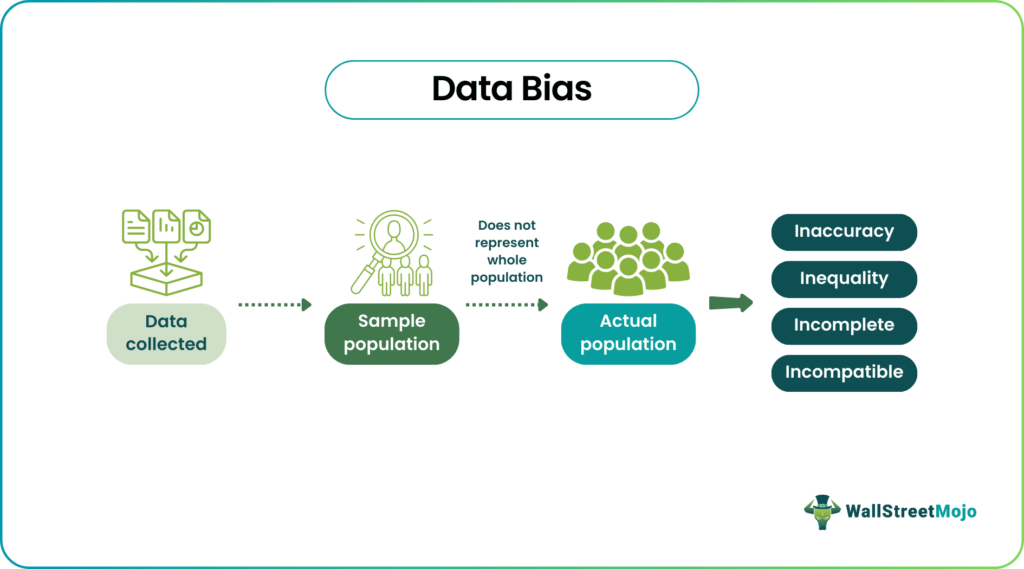

| 12 | Algorithmic Bias | When AI gives unfair results because it learned from biased data. | Biased AI can treat people unfairly without anyone noticing at first. |

| 13 | Algorithmic Transparency | Being able to clearly explain how an AI system makes decisions. | If decisions are hidden, people cannot trust or question them. |

| 14 | Algorithmic Accountability | Knowing who is responsible when an AI system makes mistakes. | Without responsibility, problems are ignored and repeated. |

| 15 | Explainability | Being able to explain why AI gave a specific answer or result. | People deserve to know why decisions affect their lives. |

| 16 | Interpretability | How easy it is for humans to understand how AI works inside. | If no one understands the system, fixing errors becomes hard. |

| 17 | Model Drift | When AI slowly becomes less accurate as time and data change. | Old AI decisions can become wrong without warning. |

How to understand these terms:

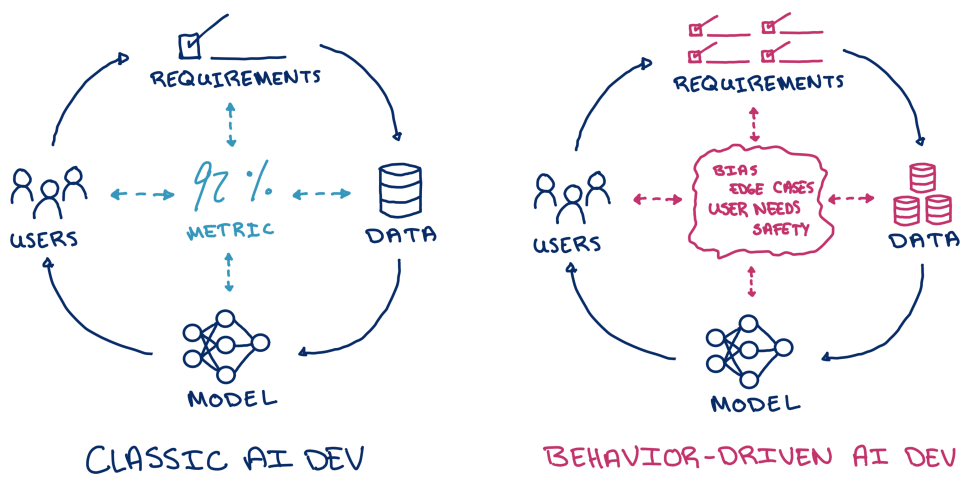

These ideas help explain how AI systems make decisions and where those decisions can quietly go wrong. Each term highlights a different weak spot where problems may begin without being noticed. By looking at them together, the table helps people recognize early warning signs before small issues turn into bigger failures.

Why this matters today:

AI is now used in many parts of daily life, including schools, banks, hospitals, and workplaces. Most people never see how these systems actually make decisions that affect them. Over time, as data and situations change, AI can start making mistakes, and those mistakes can impact many people at once.

Simple takeaway:

AI should always be fair, clear, and easy to understand. There must be a real person or team responsible for what the system does. This part of the AI ethics glossary helps people ask better questions and make smarter choices before placing their trust in AI systems.
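
For readers who like to see the idea in numbers, model drift can be watched with a very small check: compare how accurate the system was at launch with how accurate it is on recent decisions. The sketch below is only an illustration. The function names `accuracy` and `drift_detected`, the 0.05 tolerance, and the sample data are all assumptions made for this example, not a standard or recommended method.

```python
def accuracy(predictions, actuals):
    """Share of predictions that match what actually happened."""
    if not predictions or len(predictions) != len(actuals):
        raise ValueError("need two non-empty lists of equal length")
    correct = sum(1 for p, a in zip(predictions, actuals) if p == a)
    return correct / len(predictions)


def drift_detected(baseline_accuracy, recent_accuracy, tolerance=0.05):
    """Flag drift when recent accuracy falls more than `tolerance` below baseline.

    The tolerance value is an assumption; a real team would choose its own.
    """
    return (baseline_accuracy - recent_accuracy) > tolerance


# Made-up data: 1 = "approve", 0 = "decline".
launch_predictions = [1, 0, 1, 1, 0, 1, 0, 1, 1, 0]
launch_actuals = [1, 0, 1, 1, 0, 1, 0, 1, 1, 0]
recent_predictions = [1, 0, 1, 1, 0, 1, 0, 1, 1, 0]
recent_actuals = [1, 1, 0, 1, 0, 0, 0, 1, 1, 0]

baseline = accuracy(launch_predictions, launch_actuals)  # 10 of 10 correct
recent = accuracy(recent_predictions, recent_actuals)    # 7 of 10 correct
needs_review = drift_detected(baseline, recent)          # True: accuracy fell by 0.3
```

A check like this does not explain why accuracy dropped. It only signals that a person should look again at the system and its data.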

SOURCE: UPDF

Legal & Policy Principles

| No. | Term | Simple Meaning | Why It Matters in Real Life |

|---|---|---|---|

| 18 | Regulation | Rules made by governments to control how AI can be used. | Rules help stop misuse and protect people from harm. |

| 19 | Liability | Deciding who is legally responsible if AI causes damage. | Without liability, victims may have no one to hold accountable. |

| 20 | Compliance | Following laws and ethical rules when using AI. | Ignoring rules can lead to fines, bans, and loss of trust. |

| 21 | Governance | Clear plans and roles for how AI is built, used, and checked. | Good governance prevents confusion and repeated mistakes. |

How to understand these terms:

These terms explain how rules and responsibility work around AI systems. Each idea focuses on who makes the rules, who must follow them, and who answers when something goes wrong. Together, they show how order and fairness are maintained when powerful technology is used.

Why this matters today:

AI is spreading faster than laws can keep up. Many systems affect people’s money, jobs, education, and health. When rules are unclear or ignored, mistakes can harm large groups of people. Clear laws and responsibility help prevent chaos and misuse.

Simple takeaway:

AI should never operate in a rule-free space. There must be clear laws, clear responsibility, and clear oversight. When everyone knows their role, problems are easier to fix. This part of the AI ethics glossary reminds us that technology needs guidance, not blind freedom.

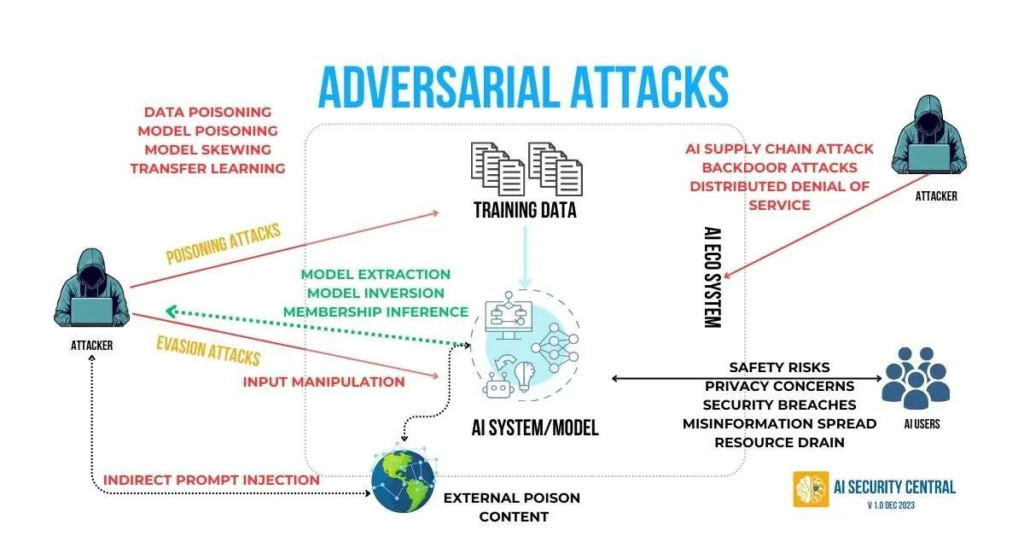

Security & Safety Terms

Source: AI Security Central

| No. | Term | Simple Meaning | Why It Matters in Real Life |

|---|---|---|---|

| 22 | Adversarial Attack | Tricking an AI system by giving it special or confusing input on purpose. | Tricked AI can make wrong decisions without knowing it is being fooled. |

| 23 | Robustness | How strong an AI system is when facing errors, pressure, or tricks. | Weak systems break easily and give unreliable results. |

| 24 | Safety Engineering | Designing AI so it does not cause harm or fail badly. | Poor design can lead to accidents, losses, or serious harm. |

| 25 | Secure AI | Protecting AI systems from hacking and misuse. | Unprotected AI can be taken over and used for damage. |

How to understand these terms:

These terms explain how AI systems can be attacked, broken, or misused. Each term shows a different way things can go wrong if safety and protection are ignored. Together, they help people understand why AI must be built carefully, not just quickly.

Why this matters today:

AI systems are now connected to the internet, businesses, and public services. Hackers and bad actors actively look for ways to trick or damage these systems. When AI is weak or poorly protected, the harm can spread fast and affect many people at once.

Simple takeaway:

AI should be strong, safe, and well protected. It must be tested against tricks and mistakes before being trusted. This part of the AI ethics glossary reminds us that powerful technology also needs strong safety and security to protect everyone who depends on it.

Societal Impact Terms

| No. | Term | Simple Meaning | Why It Matters in Real Life |

|---|---|---|---|

| 26 | Digital Divide | The gap between people who have access to technology and those who do not. | People without access are left behind in education, jobs, and services. |

| 27 | Displacement | Losing jobs because machines or AI do the work instead. | Sudden job loss can affect families and communities deeply. |

| 28 | Economic Inequality | When AI helps some people become much richer while others struggle more. | Wealth gaps grow, creating social tension and unfairness. |

| 29 | Human Rights | Basic rights that every person should have, even when AI is used. | Technology should never take away dignity or freedom. |

How to understand these terms:

These terms explain how AI affects people and society as a whole. Each term shows a different way technology can help some groups while harming others if care is not taken. Together, they highlight social risks that may grow quietly over time.

Why this matters today:

AI is changing how people learn, work, and earn money. While some benefit quickly, others lack access, skills, or protection. When these gaps widen, social problems increase and trust in technology decreases.

Simple takeaway:

AI should help everyone, not just a few. Access, fairness, and basic rights must be protected as technology grows. This part of the AI ethics glossary reminds us that progress means little if it leaves people behind or takes away their dignity.

Trust and Culture

Source: Salesfocus

| No. | Term | Simple Meaning | Why It Matters in Real Life |

|---|---|---|---|

| 30 | Trustworthy AI | AI systems that work as expected, stay safe, and follow ethical rules. | People will not use AI if they do not trust it. |

| 31 | Ethical AI | AI that respects human values and behaves in a fair and caring way. | Unethical AI can hurt people and damage society. |

| 32 | Responsible Innovation | Creating new technology while thinking ahead about risks and harm. | Moving too fast without care leads to avoidable mistakes. |

| 33 | Ethical Risk Assessment | Checking for possible harm before an AI system is used. | Finding risks early prevents bigger problems later. |

How to understand these terms:

These terms explain ideas that help people decide whether AI can be trusted. Each term focuses on making sure AI behaves in a safe, fair, and responsible way. Together, they show how trust is built through careful planning and honest thinking.

Why this matters today:

AI systems are becoming part of daily life, from online services to workplaces. When people lose trust in technology, they stop using it or push back against it. Clear values and careful checks help avoid fear and misuse.

Simple takeaway:

Trust is not automatic. It must be earned. AI should be built with care, tested for harm, and guided by human values. This part of the AI ethics glossary reminds us that long-term success depends on responsibility, not speed.

AI Behavior & Constraints

Source: MLCMU

| No. | Term | Simple Meaning | Why It Matters in Real Life |

|---|---|---|---|

| 34 | Alignment | Making sure AI’s goals match what humans care about and value. | Misaligned AI may do things that seem right to the machine but harm people. |

| 35 | Value Sensitive Design | Creating AI systems that reflect human values like fairness and safety. | When design ignores values, AI can act in ways that upset or hurt people. |

| 36 | Human-in-the-Loop (HITL) | Humans are actively involved in AI decisions as they happen. | Keeping humans involved helps catch mistakes before they affect people. |

| 37 | Human-on-the-Loop (HOTL) | Humans watch AI decisions and step in only when necessary. | This allows AI to work quickly but still gives humans a safety net. |

| 38 | Human-out-of-the-Loop (HOOTL) | AI runs automatically, with no human oversight at the moment a decision is made. | Mistakes may go unnoticed, so this is riskier if the AI is not well-tested. |

How to understand these terms:

These terms show different ways humans and AI can work together. Some systems need humans actively making choices, while others work mostly alone. Each term explains how much control humans have and how AI should stay aligned with what people value.

Why this matters today:

AI is making more decisions every day, from banking to healthcare to content filtering. If AI is not aligned with human values, or if there is no human oversight, it can make mistakes that affect many people. Understanding these roles helps prevent harm.

Simple takeaway:

AI should reflect human values and have the right level of human involvement. Some decisions need people closely involved, others can be mostly automated. This part of the AI ethics glossary reminds us that combining human judgment with AI makes systems safer, fairer, and more trustworthy.
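
The three oversight levels in the table (HITL, HOTL, HOOTL) can be pictured as a simple routing rule. The sketch below is one possible way to express them, not an established design. The names `OversightMode`, `route_decision`, and `ask_human`, and the 0.8 confidence threshold, are hypothetical. In particular, treating "step in only when necessary" as "step in when confidence is low" is an assumption made for this example.

```python
from enum import Enum


class OversightMode(Enum):
    HUMAN_IN_THE_LOOP = "HITL"       # a person decides every case
    HUMAN_ON_THE_LOOP = "HOTL"       # a person steps in only for doubtful cases
    HUMAN_OUT_OF_THE_LOOP = "HOOTL"  # no person is involved at decision time


def route_decision(ai_decision, confidence, mode, ask_human, threshold=0.8):
    """Return the final decision, consulting a human according to `mode`.

    `ask_human` is a hypothetical callback that receives the AI's suggestion
    and returns the human's decision.
    """
    if mode is OversightMode.HUMAN_IN_THE_LOOP:
        return ask_human(ai_decision)
    if mode is OversightMode.HUMAN_ON_THE_LOOP and confidence < threshold:
        return ask_human(ai_decision)
    # HOOTL, or HOTL with high confidence: the AI's decision stands.
    return ai_decision


def cautious_reviewer(suggestion):
    # Hypothetical reviewer who always sends the case for a manual check.
    return "manual review"


final = route_decision("approve", 0.55, OversightMode.HUMAN_ON_THE_LOOP, cautious_reviewer)
```

Here the AI suggested "approve" with low confidence, so under HOTL the case goes to the reviewer. Under HOOTL the same suggestion would pass straight through, which is why that mode needs the most testing beforehand.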

Decision Quality Terms

Source: TRINKA

| No. | Term | Simple Meaning | Why It Matters in Real Life |

|---|---|---|---|

| 39 | False Positives | When AI says something is a problem, but it is actually fine. | Mistakes like this can block harmless content, waste time, or cause confusion. |

| 40 | False Negatives | When AI misses a real problem and treats it as safe. | Dangerous issues may go unnoticed, leading to harm or errors. |

| 41 | Precision & Recall | Two measures of AI accuracy: precision is the share of flagged items that are real problems, and recall is the share of real problems that get flagged. | High precision and recall mean the AI correctly identifies real issues without too many mistakes. |

| 42 | Benchmarking | Testing AI against agreed standards to see how well it works. | Without benchmarking, we cannot trust AI results or compare systems fairly. |

How to understand these terms:

These terms explain how we check whether AI is making the right decisions. Some errors are obvious, while others are hidden. Each term shows a different way AI can succeed or fail, and how we measure its performance.

Why this matters today:

AI is used to filter content, detect fraud, spot diseases, and more. If it makes too many mistakes, either by flagging safe things or missing real problems, it can harm people or reduce trust. Measuring accuracy helps us know where AI works well and where it needs improvement.

Simple takeaway:

AI is not perfect, and errors happen. Understanding false positives, false negatives, and measuring accuracy helps keep AI reliable. This part of the AI ethics glossary reminds us that careful testing and checking are needed before trusting AI to make important decisions.
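
False positives, false negatives, precision, and recall are easier to grasp with a small worked example. The sketch below counts each kind of result for a made-up filter that flags messages as a problem. The function names and the sample data are assumptions chosen for illustration.

```python
def confusion_counts(predicted, actual):
    """Count true positives, false positives, and false negatives.

    Both lists hold booleans, where True means "this is a problem".
    """
    pairs = list(zip(predicted, actual))
    tp = sum(1 for p, a in pairs if p and a)        # flagged, and really a problem
    fp = sum(1 for p, a in pairs if p and not a)    # flagged, but actually fine
    fn = sum(1 for p, a in pairs if not p and a)    # missed a real problem
    return tp, fp, fn


def precision_recall(predicted, actual):
    """Precision = tp / (tp + fp); recall = tp / (tp + fn)."""
    tp, fp, fn = confusion_counts(predicted, actual)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Made-up results for eight messages.
predicted = [True, True, True, False, False, False, False, True]
actual = [True, True, False, True, False, False, False, False]

tp, fp, fn = confusion_counts(predicted, actual)        # 2, 2, 1
precision, recall = precision_recall(predicted, actual)  # 0.5 and about 0.67
```

In this example half of the flagged messages were actually fine (precision 0.5), and one of the three real problems was missed (recall about 0.67). Which of the two matters more depends on the situation: missing a disease is usually worse than a false alarm, while blocking harmless content too often erodes trust.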

Standards and Frameworks

| No. | Term | Simple Meaning | Why It Matters in Real Life |

|---|---|---|---|

| 43 | Ethics Framework | A clear set of rules and guidelines to make sure AI is developed responsibly and fairly. | Following a framework helps teams make consistent, safe, and fair decisions when creating AI, reducing harm and building trust with users and society. |

| 44 | Best Practices | Tried-and-true approaches widely accepted as the right way to develop and use AI. | Using best practices helps avoid common mistakes, ensures quality, and improves user confidence in AI systems. |

| 45 | ISO/IEC AI Standards | International standards that provide guidance on AI safety, quality, and reliability. | Standards make sure AI systems meet global expectations and can be compared, trusted, and safely used across different countries and industries. |

| 46 | Impact Assessment | Reviewing the ethical, social, and legal effects of AI before it is put into use. | Assessing impact early helps spot potential harm, inequality, or unintended consequences, allowing corrections before real people are affected. |

How to understand these terms:

These terms show tools and rules that guide the creation and use of AI. Each term is about planning, checking, and following proper methods to prevent problems. Together, they give a structured way to build AI responsibly, making it safer, fairer, and more trustworthy for everyone.

Why this matters today:

AI is being used in more areas than ever, from healthcare to finance to education. Without clear guidelines, companies and developers may make mistakes that affect people’s lives, privacy, or rights. Using standards, frameworks, and assessments reduces risk and ensures AI benefits society.

Simple takeaway:

Following rules, best practices, and standards is not optional. They protect people and build trust in AI systems. This part of the AI ethics glossary reminds us that careful planning, assessment, and guidance are key to creating AI that is safe, fair, and reliable.

Emerging and Interdisciplinary Terms

| No. | Term | Simple Meaning | Why It Matters in Real Life |

|---|---|---|---|

| 47 | AI for Good | Using AI to solve social, environmental, and humanitarian problems. | AI can help tackle climate change, health issues, and poverty. Focusing on positive outcomes ensures technology improves lives and supports communities. |

| 48 | Eco-AI | Designing AI systems to use less energy and reduce environmental harm. | AI consumes lots of electricity. Eco-friendly AI helps protect the planet and makes technology sustainable for the future. |

| 49 | Synthetic Data | Creating fake but realistic data to train AI while keeping real information private. | Synthetic data protects people’s personal details while allowing AI to learn and improve safely without risking privacy. |

| 50 | De-Identification | Removing names, addresses, or any personal details from datasets. | This makes data safer to use, preventing identity theft and protecting privacy while still letting AI learn from it. |

| 51 | Algorithmic Audit | Independent checking of AI systems to ensure fairness, accuracy, and no hidden bias. | Audits catch unfair results or mistakes before AI affects people, improving reliability and public trust in technology. |

How to understand these terms:

These terms show ways AI can be used responsibly while protecting people and the planet. Each term is about making AI safer, fairer, and helpful. Together, they highlight approaches that focus on positive outcomes, privacy, and fairness, ensuring technology is used for good and does not harm anyone.

Why this matters today:

AI is growing fast and touches almost every part of life. Without careful design, it can waste energy, invade privacy, or unfairly affect people. Using eco-friendly methods, protecting data, and auditing AI systems help ensure technology benefits society while minimizing harm.

Simple takeaway:

AI should not just be smart; it should be kind, safe, and fair. Protecting privacy, reducing environmental impact, and checking fairness make AI trustworthy. This part of the AI ethics glossary reminds us that technology works best when it respects people, society, and the planet.
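
One simple check an algorithmic audit might include is comparing how often each group receives a positive decision. The sketch below is a minimal illustration under stated assumptions: the group labels "A" and "B", the sample decisions, and the function names are all made up. A gap between groups is a signal to investigate, not proof of unfairness on its own.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the share of positive outcomes per group.

    `decisions` is a list of (group, approved) pairs, where `approved` is a bool.
    """
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        if approved:
            approvals[group] += 1
    return {group: approvals[group] / totals[group] for group in totals}


def largest_gap(rates):
    """Difference between the highest and lowest group approval rate."""
    return max(rates.values()) - min(rates.values())


# Made-up audit sample with placeholder group labels.
sample = [
    ("A", True), ("A", True), ("A", True), ("A", False),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

rates = selection_rates(sample)  # A: 0.75, B: 0.25
gap = largest_gap(rates)         # 0.5
```

A real audit would look at far more than one number, including accuracy per group and the quality of the underlying data, but even a basic comparison like this can surface questions worth asking.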

Governance, Control, and Oversight

| No. | Term | Simple Meaning | Why It Matters in Real Life |

|---|---|---|---|

| 52 | Human Oversight | Systems that let people supervise, step in, or stop AI decisions when needed. | Oversight ensures AI does not act unchecked, preventing mistakes and keeping people in control of important outcomes. |

| 53 | Decision Accountability | Clear rules about who is responsible when AI makes a decision. | Knowing responsibility helps solve problems quickly and ensures fairness, trust, and legal compliance. |

| 54 | Auditability | The ability to check and review how an AI system behaved after it made decisions. | Auditing shows why things went wrong, which helps prevent repeated mistakes and builds confidence in AI. |

| 55 | Traceability | Keeping records of where data came from, model versions, and how decisions were made. | Traceability allows developers and regulators to follow the AI’s path and fix errors effectively. |

| 56 | Oversight Fatigue | When humans supervising AI become tired or too dependent on automation and stop noticing mistakes. | Fatigue reduces safety and increases the chance of errors slipping through, especially in long or complex processes. |

How to understand these terms:

These terms explain ways humans can monitor and control AI. Each term highlights tools, rules, or risks related to oversight. Together, they show how supervision, records, and accountability help prevent mistakes and ensure AI acts as intended, even in complex or automated systems.

Why this matters today:

AI systems make many decisions faster than humans can check. Without proper oversight or clear responsibility, errors can spread quickly. Fatigue, poor record-keeping, or lack of accountability increases risk. Understanding these terms helps people stay alert, correct problems, and keep AI trustworthy.

Simple takeaway:

Humans should always remain in the loop, know who is responsible, and have ways to check and trace AI decisions. Managing oversight carefully prevents errors and builds confidence. This part of the AI ethics glossary reminds us that AI works best when humans stay involved and accountable.
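
Traceability and auditability both come down to keeping a record of each decision. The sketch below shows what one such record might contain. The field names, the `DecisionRecord` class, and the sample values are hypothetical and chosen only to mirror the table above (data source, model version, outcome, and who is responsible).

```python
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone


@dataclass(frozen=True)
class DecisionRecord:
    decision_id: str
    model_version: str      # which version of the model decided
    data_source: str        # where the input data came from
    inputs: dict            # what the model was given
    outcome: str            # what the model decided
    responsible_team: str   # who answers for this decision
    recorded_at: str        # UTC timestamp in ISO 8601 format


def record_decision(log, decision_id, model_version, data_source,
                    inputs, outcome, responsible_team):
    """Append one decision to an in-memory log and return the stored record."""
    record = DecisionRecord(
        decision_id=decision_id,
        model_version=model_version,
        data_source=data_source,
        inputs=inputs,
        outcome=outcome,
        responsible_team=responsible_team,
        recorded_at=datetime.now(timezone.utc).isoformat(),
    )
    log.append(record)
    return record


def to_json_line(record):
    """Serialize a record so it can be written to a file and reviewed later."""
    return json.dumps(asdict(record), sort_keys=True)


# Made-up example values.
audit_log = []
entry = record_decision(audit_log, "loan-001", "v1.2", "applications-2024",
                        {"income": 50000}, "declined", "credit-risk")
line = to_json_line(entry)
```

With records like this, a reviewer can later answer three basic questions about any decision: what the system saw, which version decided, and who is accountable.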

Data Integrity and Lifecycle

| No. | Term | Simple Meaning | Why It Matters in Real Life |

|---|---|---|---|

| 57 | Data Provenance | Keeping records of where data came from, how it was collected, and any changes made to it. | Knowing data origins ensures trust, helps fix mistakes, and allows proper use of information without errors or bias. |

| 58 | Data Quality | Ensuring data is correct, complete, consistent, and reliable. | Poor quality data leads to wrong AI decisions, which can harm people, waste resources, or reduce trust in technology. |

| 59 | Consent Fatigue | When users get tired of too many or unclear consent requests and stop paying attention. | Fatigued users may agree to things without understanding them, creating privacy risks and ethical issues. |

| 60 | Secondary Data Use | Using data for purposes other than what it was originally collected for. | Using data beyond its original purpose can violate trust, laws, and personal privacy if not handled carefully. |

| 61 | Data Leakage | When sensitive or private data accidentally gets exposed. | Leaks can lead to identity theft, financial loss, or harm to individuals whose data is exposed. |

How to understand these terms:

These terms explain how data should be handled safely and responsibly in AI systems. Each term highlights risks, responsibilities, and practices for keeping data accurate, private, and trustworthy. Together, they provide a clear view of the safeguards needed to maintain confidence in AI-driven decisions.

Why this matters today:

AI depends on large amounts of data to work effectively. Mistakes, poor quality, misuse, or leaks can affect individuals and society. Ensuring proper documentation, consent, and careful data handling protects users and prevents harm while maintaining ethical AI practices.

Simple takeaway:

Data must be accurate, tracked, and used responsibly. Users should not be overwhelmed, and sensitive information must be protected. This part of the AI ethics glossary reminds us that AI works best when data is treated with care, respect, and transparency.

Model Development and Deployment

| No. | Term | Simple Meaning | Why It Matters in Real Life |

|---|---|---|---|

| 62 | Training Data Bias | This means the AI learns from data that is not fair or balanced. If the data mostly shows one group or one situation, the AI copies that bias. It then makes unfair or wrong decisions for people who were not well represented. | Biased AI can treat people unfairly in jobs, loans, schools, or healthcare. It may favor some and ignore others without meaning to. |

| 63 | Overfitting | Overfitting happens when an AI memorizes examples instead of learning patterns. It works well during training but fails when faced with new or real-life situations that look a little different. | In real life, things change. An AI that overfits breaks easily and gives wrong answers when it matters most. |

| 64 | Underfitting | Underfitting means the AI is too simple and does not learn enough. It misses important details and patterns, so its answers stay shallow, basic, or incorrect. | This leads to poor predictions and weak decisions, especially in areas where accuracy and understanding are important. |

| 65 | Model Opacity | Model opacity means we cannot see or understand how the AI makes decisions. It feels like a black box where answers come out without explanations. | When people do not understand decisions, trust is lost. It also becomes hard to fix mistakes or prove fairness. |

| 66 | Deployment Context Shift | This happens when an AI is used in a place or situation it was not trained for. The world changes, but the AI stays the same. | The AI may act strangely or fail because it does not understand the new environment or rules. |

How to understand these terms:

Think of AI like a student learning from books. If the books are biased, learning becomes unfair. If the student only memorizes answers, real exams go badly. If lessons are too simple, learning stays weak. When teachers cannot explain grading, trust drops. This is the foundation of an AI ethics glossary.

Why this matters today:

AI is now used in schools, hospitals, hiring, and money decisions. Small mistakes can affect real lives. When models are unclear or trained wrongly, people suffer without knowing why. Understanding these ideas helps us ask better questions and demand safer, fairer systems built for real human use.

Simple takeaway:

AI is powerful but not perfect. It learns only what we show it and where we place it. When data, design, or use goes wrong, outcomes suffer. Knowing these basics from an AI ethics glossary helps anyone, even a beginner, stay aware, alert, and responsible around AI systems.
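
The difference between memorizing and learning, which is the heart of overfitting, can be shown with a toy example. The sketch below is deliberately simplified and entirely made up: the `Memorizer` and `ThresholdRule` classes, the "5 or more is high" pattern, and the numbers are assumptions for illustration, not how real models are built.

```python
def true_label(x):
    # The real pattern to be learned: numbers of 5 or more count as "high".
    return x >= 5


class Memorizer:
    """Overfits: stores every training example and answers False for anything new."""

    def fit(self, xs, ys):
        self.table = dict(zip(xs, ys))

    def predict(self, x):
        return self.table.get(x, False)


class ThresholdRule:
    """Learns one cut-off: the smallest training value labelled True."""

    def fit(self, xs, ys):
        self.cutoff = min(x for x, y in zip(xs, ys) if y)

    def predict(self, x):
        return x >= self.cutoff


def accuracy(model, xs):
    """Share of values in `xs` where the model agrees with the real pattern."""
    return sum(model.predict(x) == true_label(x) for x in xs) / len(xs)


train_xs = [0, 2, 5, 7, 9]   # examples seen during training
test_xs = [1, 3, 4, 6, 8]    # new examples never seen before
train_ys = [true_label(x) for x in train_xs]

memorizer = Memorizer()
memorizer.fit(train_xs, train_ys)
rule = ThresholdRule()
rule.fit(train_xs, train_ys)

memorizer_train = accuracy(memorizer, train_xs)  # 1.0
memorizer_test = accuracy(memorizer, test_xs)    # 0.6
rule_train = accuracy(rule, train_xs)            # 1.0
rule_test = accuracy(rule, test_xs)              # 1.0
```

Both models look perfect on the examples they trained on. Only the new examples reveal that the memorizer learned nothing general, which is why testing on unseen data matters.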

Legal, Compliance, and Risk

| No. | Term | Simple Meaning | Why It Matters in Real Life |

|---|---|---|---|

| 67 | Due Diligence | Due diligence means taking proper care before using an AI system. People check if the AI is safe, fair, and legal. They try to spot problems early instead of fixing damage later. | This helps prevent harm, lawsuits, and public backlash by thinking ahead before AI affects real people. |

| 68 | Regulatory Alignment | This means making sure AI follows current laws, rules, and government policies. Since rules change often, AI systems must be updated regularly to stay legal. | If AI breaks laws, companies can face fines, bans, or loss of trust from users and regulators. |

| 69 | Risk Allocation | Risk allocation decides who is responsible if AI causes harm. It asks who should fix the problem or pay for the damage caused by the system. | Clear responsibility prevents confusion and ensures victims are not left without help or answers. |

| 70 | Duty of Care | Duty of care means having a responsibility to protect people from harm that can be predicted. If harm is likely, steps must be taken to prevent it. | Ignoring known risks can hurt users and lead to serious legal trouble for organizations. |

| 71 | Explainability Compliance | This means AI decisions must be explainable in a way people can understand. Laws may require clear reasons for important decisions made by AI. | People deserve to know why an AI said yes or no, especially in jobs, loans, or healthcare. |

How to understand these terms:

Think of AI like a machine used in public places. Before using it, safety checks are done. Rules are followed. Responsibility is clear if something breaks. Care is taken to avoid harm. And decisions are explained clearly. That is the idea behind an AI ethics glossary.

Why this matters today:

AI now helps decide who gets hired, approved, or treated. When laws, safety, or responsibility are ignored, people can be harmed silently. Understanding these ideas helps society demand safer systems and fair treatment instead of blind trust in technology.

Simple takeaway:

AI must follow rules, protect people, and explain itself. Careful checks, clear responsibility, and honest explanations matter. Knowing these basics from an AI ethics glossary helps anyone understand why ethical and legal thinking is essential when AI enters real life.

Security, Misuse, and Abuse

| No. | Term | Simple Meaning | Why It Matters in Real Life |

|---|---|---|---|

| 72 | Model Inversion Attack | This is when someone tries to guess or rebuild the data used to train an AI by studying its answers. Private or sensitive information can be exposed without permission. | This can leak personal data, medical records, or confidential details that were never meant to be shared. |

| 73 | Data Poisoning | Data poisoning happens when bad data is secretly added during AI training. This tricks the AI into learning wrong or harmful behavior from the start. | A poisoned AI can give dangerous advice, make biased decisions, or fail when people rely on it most. |

| 74 | Dual-Use Risk | Dual-use risk means an AI made for good can also be used for harm. The same tool can help people or hurt them, depending on who controls it. | Helpful tools can be misused for scams, spying, or spreading false information at scale. |

| 75 | Malicious Automation | This means using AI to automate harmful actions like scams, fake messages, or cyberattacks. AI makes bad actions faster and harder to stop. | One person can harm thousands quickly, making abuse cheaper, faster, and more damaging. |

| 76 | Security-by-Design | Security-by-design means thinking about safety from the very beginning. Protection is built into the system instead of added later. | Early security reduces hacks, misuse, and costly fixes after damage has already occurred. |

How to understand these terms:

Imagine AI as a powerful tool like electricity. If wires are exposed, people get hurt. If rules are ignored, damage spreads fast. These terms explain how AI can be attacked, misused, or protected. They form an important part of an AI ethics glossary for everyday understanding.

Why this matters today:

AI tools are everywhere, from phones to offices. When security is weak, bad actors can misuse them at scale. Attacks are faster, smarter, and harder to detect. Understanding these risks helps people demand safer systems before harm becomes widespread.

Simple takeaway:

AI security is not optional. If safety is ignored early, damage grows quickly. Strong design, clean data, and misuse awareness matter. Learning these basics from an AI ethics glossary helps people stay alert, informed, and protected in an AI-powered world.

Societal and Cultural Impact

| No. | Term | Simple Meaning | Why It Matters in Real Life |
|---|---|---|---|
| 77 | Automation Bias | Automation bias means people trust AI answers too much, even when they feel something is wrong. They stop thinking for themselves and follow the machine blindly. | This can cause mistakes in schools, hospitals, or offices when human judgment should have stepped in. |
| 78 | Social Scoring | Social scoring ranks people based on their behavior, actions, or data. It creates a score that can affect how others treat them. | A low score can limit jobs, travel, or services, even if the data is unfair or wrong. |
| 79 | Surveillance Creep | Surveillance creep happens when monitoring slowly increases over time. What starts as safety tracking becomes constant watching. | People lose privacy without noticing, and normal life begins to feel controlled or watched. |
| 80 | Cultural Bias | Cultural bias means AI favors one culture’s values and ignores others. It reflects the views of those who built or trained it. | This can erase traditions, misunderstand languages, and treat some groups unfairly or disrespectfully. |
| 81 | Power Asymmetry | Power asymmetry means big institutions control AI, while individuals have little say. One side has more data, tools, and power. | This imbalance can lead to abuse, unfair rules, and people feeling helpless against systems they cannot question. |

How to understand these terms:

Think of AI as a voice that grows louder over time. If people stop questioning it, problems grow. If watching increases quietly, freedom shrinks. If one culture or group controls the tools, others get ignored. These ideas are core to an AI ethics glossary explained simply.

Why this matters today:

AI now influences opinions, choices, and opportunities. When trust becomes blind and power becomes unequal, society changes quietly. Understanding these risks helps people stay aware, protect dignity, and push back when technology crosses healthy boundaries.

Simple takeaway:

AI shapes society, not just machines. Trust should be balanced, privacy protected, and power shared. When people understand these ideas from an AI ethics glossary, they can stay informed, cautious, and in control instead of being silently shaped by technology.

Trust, Transparency, and Perception

| No. | Term | Simple Meaning | Why It Matters in Real Life |
|---|---|---|---|
| 82 | Algorithmic Legitimacy | This means people believe AI decisions are fair and reasonable. They feel the system treats everyone properly and does not act randomly or secretly. | Without this belief, people stop trusting AI systems and resist using them, even if they are useful. |
| 83 | Trust Calibration | Trust calibration means trusting AI only as much as it deserves. People should know what AI can and cannot do. | Too much trust causes blind mistakes. Too little trust wastes helpful tools that could support good decisions. |
| 84 | Transparency Theater | This happens when companies explain AI in a fake or shallow way. The words sound good but explain nothing useful. | People feel informed but are actually confused, which hides problems instead of solving them. |
| 85 | Disclosure Obligations | This means users must be told when AI is involved in a decision. Nothing should be hidden. | People deserve to know if a machine, not a human, is judging or deciding their outcome. |
| 86 | User Recourse | User recourse means people can question, appeal, or challenge AI decisions that affect them. | Without this option, people feel powerless when AI makes mistakes or unfair choices. |

How to understand these terms:

Imagine AI as a referee in a game. Players must trust the referee, understand the rules, know when a machine is judging, and be allowed to complain if a call feels wrong. These ideas form a key part of an AI ethics glossary explained simply.

Why this matters today:

AI now decides loans, jobs, grades, and access to services. If trust is misplaced, explanations are fake, or appeals are blocked, people suffer quietly. Understanding these terms helps society demand fairness, honesty, and human control over powerful systems.

Simple takeaway:

AI should be trusted carefully, explained clearly, and questioned when needed. People must know when AI is used and have the right to challenge it. Learning these basics from an AI ethics glossary helps users stay informed, confident, and protected.

Human-AI Interaction

| No. | Term | Simple Meaning | Why It Matters in Real Life |
|---|---|---|---|
| 87 | Cognitive Offloading | Cognitive offloading means people let AI do the thinking for them. Instead of deciding or judging, they rely on the machine’s answer. | Over time, people may stop thinking deeply, questioning results, or trusting their own judgment. |
| 88 | Deskilling | Deskilling happens when people lose skills because AI does tasks for them again and again. Practice slowly disappears. | When AI fails or is unavailable, people may struggle to do basic tasks they once knew well. |
| 89 | Human Agency Preservation | This means making sure humans stay in control, even when AI helps them. AI should support decisions, not replace them fully. | People should feel empowered, not controlled, by technology that affects their lives. |
| 90 | Interaction Framing | Interaction framing is how AI presents answers or suggestions. The wording and tone can strongly influence how users think or act. | Poor framing can push people toward bad choices without them realizing it. |
| 91 | Psychological Safety | Psychological safety means protecting users from stress, fear, confusion, or emotional harm caused by AI interactions. | Unsafe AI can damage confidence, mental health, or decision-making, especially for young or vulnerable users. |

How to understand these terms:

Think of AI like a helper in school. If it gives answers too easily, students stop learning. If it speaks too forcefully, students follow without thinking. If it scares or confuses them, learning suffers. These ideas help explain safe human-AI relationships in an AI ethics glossary.

Why this matters today:

AI tools are used daily for writing, learning, planning, and advice. When people rely too much, skills fade and control weakens. Understanding these terms helps users stay mentally strong, confident, and aware while working with intelligent systems.

Simple takeaway:

AI should help humans think better, not think less. Skills, control, and emotional safety must come first. When people understand these ideas from an AI ethics glossary, they can use AI wisely without losing confidence, judgment, or independence.

Ethics, Standards, and Evaluation

| No. | Term | Simple Meaning | Why It Matters in Real Life |
|---|---|---|---|
| 92 | Ethical Benchmarking | Ethical benchmarking means measuring an AI system against clear ethical standards. It checks whether the AI behaves fairly, safely, and responsibly compared to what is expected. | This helps people see which systems are trustworthy and which ones may cause harm or unfair treatment. |
| 93 | Ethics-by-Design | Ethics-by-design means thinking about fairness, safety, and responsibility while building AI, not after problems appear. Ethics become part of the system from day one. | This prevents future harm and reduces costly fixes after people are already affected. |
| 94 | Continuous Monitoring | Continuous monitoring means regularly checking how AI behaves after it is launched. Problems are spotted as they appear, not years later. | AI behavior can shift over time as data and usage change. Ongoing checks help catch bias, errors, or misuse early. |
| 95 | Third-Party Review | Third-party review means outside experts examine an AI system. They are not connected to the creators and give an honest opinion. | Independent reviews build trust and uncover hidden risks that insiders may miss. |
| 96 | Ethical Debt | Ethical debt builds up when ethical problems are ignored to save time or money. These problems grow bigger and harder to fix later. | Ignored ethics can lead to serious harm, public anger, and legal trouble in the future. |

How to understand these terms:

Think of AI like a building. Standards check its strength, ethics are built into the design, inspections continue after opening, outside inspectors verify safety, and ignored cracks become dangerous over time. These ideas sit at the heart of an AI ethics glossary for beginners.

Why this matters today:

AI systems are growing fast, often faster than careful thinking. Without monitoring and reviews, small ethical issues can spread widely. Understanding these ideas helps people demand safer technology before problems affect millions.

Simple takeaway:

Ethical AI needs planning, checking, and honesty. When ethics are delayed, risks grow. Clear standards, regular reviews, and outside opinions matter. Learning these basics from an AI ethics glossary helps people support responsible AI that protects real lives.

Long-Term and Emerging Risks

| No. | Term | Simple Meaning | Why It Matters in Real Life |
|---|---|---|---|
| 97 | Capability Overhang | When an AI system can already do more than people have discovered or put to use, so its real power runs ahead of the rules, oversight, or safety systems around it. | Unchecked capabilities can lead to errors or misuse that humans may not control in time, increasing risk to people or organizations. |
| 98 | Value Drift | When AI slowly behaves in ways that no longer match human values or intentions. | Small changes over time can make AI decisions unfair, unsafe, or harmful if not monitored and corrected. |
| 99 | Socio-Technical Systems | How AI, humans, organizations, and social rules interact. | Understanding this helps prevent unintended consequences when technology affects people, jobs, and institutions. |
| 100 | Long-Term Harm | Negative effects of AI that appear gradually or accumulate over time. | Immediate results may look fine, but long-term consequences can be serious if risks are ignored. |
| 101 | Systemic Risk | Failures that spread across industries, society, or countries. | One AI failure can cascade, causing wide-reaching problems affecting many people or sectors. |

How to understand these terms:

These terms explain AI risks that can grow over time or affect society on a large scale. Each term highlights a way AI might outgrow human control, change its behavior, or interact with humans and institutions. Understanding them helps people anticipate problems before they become serious and widespread.

Why this matters today:

AI is becoming more powerful and interconnected with human systems. Small mistakes, unchecked power, or misaligned behavior can create problems that spread quickly. Being aware of these long-term and systemic risks is essential to keep AI safe and beneficial.

Simple takeaway:

We must monitor AI so that it stays aligned with human values and is integrated responsibly into society. Planning for future consequences helps prevent harm. This part of the AI ethics glossary reminds us that thinking ahead is just as important as managing AI today.

FAQs

1. Why do people keep talking about fairness and accountability in artificial intelligence systems?

Fairness and accountability matter because AI increasingly influences hiring, lending, healthcare, content moderation, and public services. Without clear ethical grounding, these systems can quietly reinforce bias, exclude certain groups, or make decisions no one can properly explain or challenge.

2. How does data privacy connect to responsible use of machine learning tools?

AI systems learn from massive datasets, often containing personal or sensitive information. Understanding privacy principles helps readers see how data collection, consent, storage, and reuse can either protect individuals or expose them to misuse, surveillance, or exploitation.

3. What risks should non-technical professionals be aware of when using AI-powered tools?

Non-technical users often assume AI outputs are neutral or accurate by default. Ethical awareness helps them recognize risks such as hidden bias, overconfidence in automated decisions, lack of human oversight, and unintended social consequences.

4. How do government rules and industry policies shape ethical AI development?

Regulations and internal policies determine what AI systems are allowed to do, how they are audited, and who is responsible when harm occurs. Studying these ideas helps readers understand where innovation ends and accountability begins.

5. Why is transparency important when organizations deploy automated decision systems?

Transparency builds trust. When people understand how decisions are made, even at a high level, they are more likely to accept outcomes and question errors. Ethical frameworks emphasize explainability so AI does not become a black box of authority.

6. How can understanding AI ethics help leaders make better long-term decisions?

Ethical literacy helps leaders anticipate reputational, legal, and societal risks before they escalate. It shifts decision-making from short-term efficiency to long-term sustainability, trust, and alignment with human values.

7. What is the benefit of learning ethical AI concepts before problems arise?

Learning these concepts early allows individuals and organizations to design safeguards proactively rather than reacting to crises. It encourages thoughtful deployment, better human oversight, and healthier human–AI collaboration from the start.

Conclusion

An AI ethics glossary is not just a reference; it is a lens for seeing how AI shapes decisions and everyday life. When language becomes clear, judgment improves and responsibility feels closer.

This matters because ethical gaps rarely announce themselves. They appear quietly.

This glossary helps you recognize those moments before harm becomes routine. It encourages thoughtful use rather than blind adoption.

It also builds confidence to question outcomes instead of accepting them by default. As AI continues to influence work and public systems, clarity becomes a form of protection.

Understanding these terms is not about mastery. It is about awareness. And awareness is where better choices begin.