You Can’t Switch Off AI: The False Hope of Pulling the Plug

You can’t switch off AI, no matter how loudly critics demand pulling the plug on AI in moments of fear or uncertainty.

That sentence may sound dramatic. It is not. It is a structural reality.

Whenever a powerful technology advances faster than society’s comfort level, the first instinct is control. The second instinct is shutdown.

Today, the phrase “pulling the plug on AI” circulates in boardrooms, classrooms, policy debates, and family WhatsApp groups.

It feels like a clean solution. Just stop it. Just pause it. Just unplug it.

But the world does not work that way.

Let’s take a look behind the scenes.

The Myth of a Master Switch

People imagine AI as a giant central machine humming in a secret lab.

If that were true, pulling the plug on AI would be as simple as flipping a breaker.

Instead, AI development is distributed across dozens of organizations, thousands of researchers, and millions of servers across continents.

Consider major players such as OpenAI, Google DeepMind, Anthropic, and Meta.

Each operates independently.

Each has its own funding, strategy, and roadmap.

Even if one organization stopped overnight, others would continue. If every major Western lab paused, research would likely carry on elsewhere, and could even accelerate.

Technology does not wait politely.

There is no central switch and no global control room. There is no red button.

The idea that you can’t switch off AI is not philosophical. It is logistical.

Open Source Changed Everything

The second reason you can’t switch off AI is open source.

When advanced models are released publicly, their weights (the learned parameters) can be downloaded, copied, modified, and run on independent hardware.

Once knowledge escapes into the open, it cannot be recalled.

History bears this out.

You cannot “un-release” scientific breakthroughs or mathematical discoveries.

Nor can you erase code that has already been cloned thousands of times.

Pulling the plug on AI would require deleting not just servers, but human memory, academic papers, GitHub repositories, and offline backups sitting in private data centers.

That is impossible.

The toothpaste is out of the tube.

Economic Gravity Is Too Strong

Technology that drives productivity does not get abandoned easily.

AI is now embedded in:

• Supply chain optimization

• Fraud detection systems

• Medical diagnostics

• Drug discovery

• Customer service automation

• Financial risk modeling

Trillions of dollars in economic value are connected to AI systems. Businesses are restructuring operations around automation.

Governments are modernizing public services with machine learning tools.

Imagine asking global markets to voluntarily surrender efficiency gains because society feels uneasy.

Unlikely.

When competitive advantage is at stake, no nation wants to fall behind. This dynamic resembles the technological rivalry between the United States and China.

Strategic technologies rarely slow down once geopolitical competition intensifies.

AI’s Economic Lock-In

| Area of AI Integration | How AI Is Embedded in Operations | Economic Dependency and Impact |
|---|---|---|
| Supply Chain Optimization | AI predicts demand, manages inventory, routes logistics, and reduces delays across global networks | Prevents costly disruptions, lowers operational waste, and protects billions in trade flows across industries |
| Fraud Detection Systems | Machine learning flags suspicious transactions in real time across banking and digital payments | Saves financial institutions billions annually; removing AI increases fraud exposure and insurance costs |
| Medical Diagnostics | AI analyzes imaging, pathology data, and patient records to assist early disease detection | Speeds diagnosis, reduces human error, and lowers long-term healthcare expenses |
| Drug Discovery | Algorithms simulate molecular interactions and shorten research cycles | Can cut years off development timelines, saving billions in R&D expenditure |
| Customer Service Automation | AI chatbots and voice systems handle high-volume inquiries 24/7 | Reduces staffing costs, improves response times, and scales service without proportional hiring |
| Financial Risk Modeling | AI evaluates credit risk, market volatility, and portfolio exposure in near real time | Improves capital allocation, supports market stability, and aids regulatory compliance |

Pulling the plug on AI would mean surrendering strategic leverage. That is not how global power politics works.

AI Is Already Infrastructure

There is another uncomfortable truth.

AI is no longer experimental.

It is operational infrastructure.

Banks use AI to detect suspicious transactions in milliseconds. Hospitals rely on machine learning for imaging analysis.

Cybersecurity systems scan billions of events per day using intelligent automation.

Turning it off would not feel like switching off a chatbot.

It would feel like switching off electricity.

Modern societies are interwoven with digital intelligence layers. Removing them abruptly would cause systemic disruption across healthcare, finance, defense, and logistics.

You can’t switch off AI because it is no longer a product.

It is becoming plumbing.

And societies rarely rip out plumbing mid-construction.

Fear Fuels the Fantasy

Calls for pulling the plug on AI often spike during moments of alarm. A viral deepfake spreads misinformation.

An industry announces layoffs due to automation. A prominent researcher warns about long-term risks.

Fear amplifies urgency.

But fear also simplifies complex realities into dramatic gestures.

Shut it down.

Pause it.

Ban it.

The problem is coordination.

To truly switch off AI globally, every major nation would need to agree simultaneously.

Enforcement mechanisms would need to monitor private labs, universities, startups, and military research programs. Rogue actors would need to be neutralized.

Black market computing clusters would need to be tracked.

This level of global compliance has never been achieved in any high value technological domain.

Not nuclear science. Not cyber weapons.

Not genetic engineering.

Why would AI be different?

Innovation Does Not Reverse

Throughout history, transformative technologies have followed a pattern.

First, resistance.

Then regulation.

Then normalization.

Printing presses were feared. Electricity was feared.

The internet was feared. Social media was feared. Each brought unintended consequences. None were unplugged.

Societies adapt.

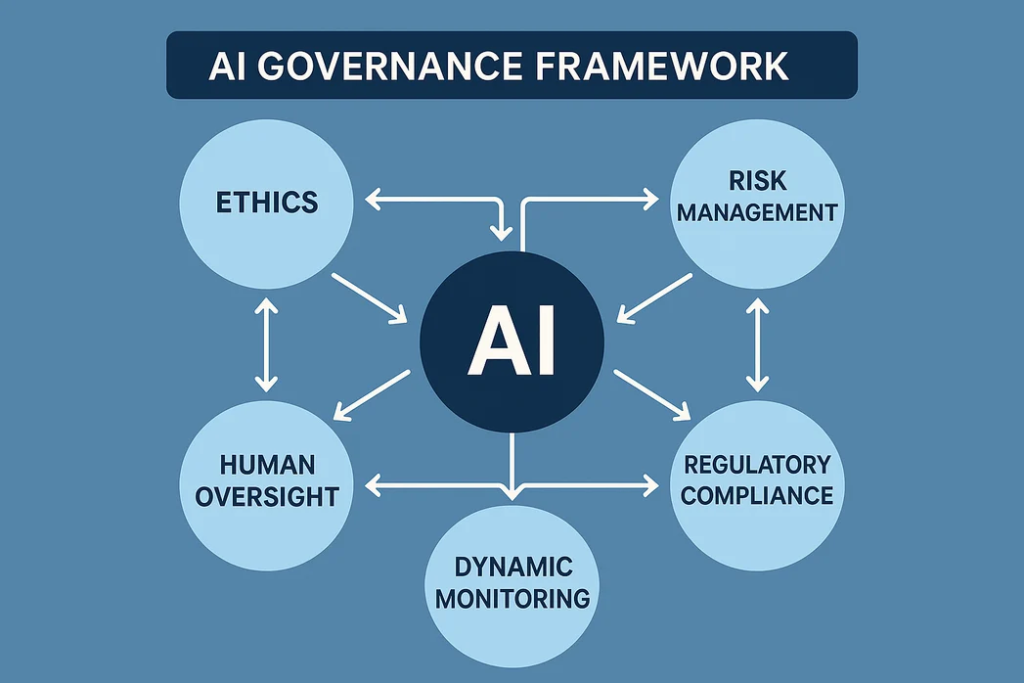

They build governance frameworks.

They implement safety standards.

They create cultural norms.

But they rarely erase innovation entirely.

Pulling the plug on AI assumes humanity can collectively choose technological regression. History suggests otherwise.

The Real Question Is Governance

If you can’t switch off AI, what should society focus on?

Three priorities emerge.

First, safety research must scale alongside capability research.

Organizations like Anthropic emphasize alignment and interpretability.

More funding should flow toward understanding model behavior, not just expanding performance.

Second, regulatory frameworks must evolve intelligently.

Blanket bans tend to push development underground. Smart oversight encourages transparency while discouraging reckless deployment.

Third, individuals must increase literacy.

Non-technical professionals, educators, coaches, and business leaders need a working knowledge of AI systems. Ignorance fuels panic. Competence builds stability.

The future will not reward denial.

It will reward adaptation.

A Psychological Shift

There is also a deeper layer to this debate.

When people say “pull the plug on AI,” what they often mean is “pull the plug on uncertainty.”

AI represents acceleration.

Acceleration disrupts identity. If your profession changes, your sense of competence is challenged.

That discomfort is real.

But technological evolution has always forced reinvention.

Farmers adapted to industrialization. Typists adapted to word processors. Retailers adapted to e-commerce.

The pattern is not the end of human work. It is the transformation of roles.

You can’t switch off AI because you cannot switch off human curiosity and competition. Innovation emerges from incentives, ambition, and problem-solving instincts.

Those traits are not disappearing.

What Would It Actually Take Internationally?

To genuinely pull the plug on AI, several extreme conditions would need to align:

• Global treaty with universal compliance

• Strict monitoring of compute resources

• Criminalization of unsanctioned model training

• Physical destruction of large-scale data centers

• Cultural consensus that AI development is taboo

Even then, enforcement would be imperfect.

And the cost to economic growth would be staggering.

The cure would likely feel worse than the disease.

Global AI Shutdown Reality

| What Switching Off AI Would Entail | Why It Is Impractical | Risks and Scale of Difficulty |
|---|---|---|
| Global treaty with universal compliance | Requires alignment between rivals such as the United States, China, Russia, and the European Union, despite deep geopolitical mistrust | Defection risk is high; one non-compliant nation gains strategic advantage. Enforcement would resemble nuclear-level surveillance |
| Strict monitoring of global compute resources | AI training can be distributed, hidden, or run in smaller clusters across jurisdictions | Massive privacy trade-offs; a global digital surveillance state; extremely high technical and political resistance |
| Criminalization of unsanctioned model training | Open-source models circulate freely online and can be modified privately | Black markets emerge; enforcement becomes an endless game of cat-and-mouse; innovation shifts underground |
| Physical destruction of large-scale data centers | Data centers power banking, healthcare, logistics, and defense systems | Economic shockwaves; infrastructure collapse; potentially trillions in losses; disruption of critical services |
| Global cultural consensus that AI is taboo | AI is embedded in education, medicine, finance, media, and governance | Cultural fragmentation; youth and startups resist; long-term compliance is impossible to sustain |

Bottom line: The logistical, economic, and political barriers are monumental. The cure would not only feel worse than the disease, it could destabilize the very systems modern civilization depends upon.

Adaptation Is Power

The strategic question is not whether you can switch off AI.

It is how to position yourself in a world where AI keeps evolving.

That means:

- Learning how AI tools enhance productivity.

- Understanding their limitations.

- Building distinctly human skills such as judgment, empathy, and strategic thinking.

- Using AI as leverage rather than treating it as a rival.

Fear shrinks options.

Literacy expands them.

Technology is rarely stopped. It is steered. The conversation must therefore mature from emotional reaction to structural reality.

Pulling the plug on AI may sound decisive. In practice, it is an illusion wrapped in urgency.

The future will not be shaped by those demanding shutdowns.

It will be shaped by those designing guardrails while moving forward.

FAQs

1. Why can’t governments simply ban or shut down AI development?

Governments can regulate AI within their borders, but they cannot fully ban or shut down AI development globally. AI research is distributed across private companies, universities, startups, military labs, and open-source communities worldwide. Even if one country pauses development, others may continue. The decentralized nature of AI makes a complete shutdown unrealistic without unprecedented global coordination.

2. Is it technically possible to pull the plug on AI systems already in use?

It is technically possible to turn off specific AI systems within an organization.

However, governments and corporations cannot realistically pull the plug on AI globally, because AI is now embedded in banking, healthcare, cybersecurity, logistics, and communications infrastructure. Shutting down all AI systems would disrupt critical services and create widespread instability.

3. What would a global AI pause or moratorium actually require?

A true global AI pause would require:

- International treaties with universal participation

- Monitoring of advanced computing hardware

- Enforcement mechanisms across borders

- Transparency from private and military research labs

Given geopolitical competition and economic incentives, achieving this level of cooperation would be extremely difficult.

4. Does open-source AI make it harder to switch off AI?

Yes. Open-source AI models allow developers worldwide to download, modify, and run systems independently. Once researchers release model weights and publish research papers, no one can recall them. This makes AI progress far more difficult to control or reverse than centralized technologies.

5. Why is AI considered critical infrastructure now?

Organizations are increasingly integrating AI into financial fraud detection, medical imaging, supply chain optimization, predictive maintenance, and cybersecurity systems. These applications operate behind the scenes in everyday services. Removing AI abruptly could disrupt essential systems, which is one reason you can’t switch off AI without major economic consequences.

6. Could strict AI regulation slow down AI advancement?

Yes. Regulation can slow reckless deployment and raise safety standards. However, it usually shapes innovation rather than stopping it entirely. Well-designed AI governance frameworks aim to balance safety, transparency, accountability, and competitiveness instead of eliminating development.

7. If we can’t switch off AI, what is the realistic solution?

The realistic approach is responsible AI governance, global dialogue, and public literacy. Instead of focusing on shutting down AI, policymakers and organizations are working on AI safety research, ethical guidelines, auditing systems, and alignment strategies. For individuals, building AI awareness and adaptive skills is more practical than hoping for a technological rollback.

Conclusion

Switching off AI is not a realistic strategy. It is an emotional reaction to a technological tide that has already reshaped economies, institutions, and daily life.

The real battle is not about pulling a master switch but about shaping incentives and governance. It is also about developing the psychological maturity to use AI well.

Open systems, economic gravity, and infrastructure dependence make reversal improbable.

What remains fully within human control is direction.

Adaptation and responsible oversight are power. Disciplined stewardship and courageous clarity, not fear, will decide the future.