AI Therapy Apps: 5 Mistakes You Must Avoid

Mistakes with AI therapy apps can quietly derail your mental wellness journey if you’re not aware of them. The fact is that AI is rapidly reshaping mental health care through chatbots, virtual therapists, and always-on support tools.

These apps offer affordable, stigma-free help without appointments — and they often feel easier than traditional therapy.

But when privacy risks, misuse, or emotional overdependence comes into play, the damage isn’t always visible right away.

Used wisely, though, AI can genuinely support your well-being. It can encourage reflection, build habits, and offer structure on tough days.

This guide breaks down the common traps — and how to avoid them — so you can make AI work for you, not against you.

Mistake #1: Blindly Trusting the App Without Research

Are All Mental Health Apps Created Equal?

You download a mental health app. It looks sleek, has glowing reviews, and claims to help you manage anxiety or track your mood.

So, you assume it’s safe and trustworthy.

But is it, really?

Here’s the thing: not all AI therapy apps are created with the same standards.

Many users make the first mistake by trusting a polished interface and assuming that professional-grade care is baked into every feature. It’s not.

The Problem: Lack of Clinical Backing and Privacy Standards

A surprising number of mental health apps aren’t clinically validated.

Some don’t follow basic data protection laws like HIPAA (in the U.S.) or GDPR (in Europe).

That means your personal data, emotional history, and sensitive conversations may not be securely stored — or even remain private.

Worse still, some AI-powered apps give overly simplistic, even inappropriate, advice.

Imagine someone in distress turning to a chatbot for support — and receiving a generic, dismissive auto-response like “Try breathing exercises.”

That actually happened in real life. The result? Frustration and increased helplessness.

What You Can Do Instead

Don’t just scroll the app store and click ‘install.’ A few minutes of research can save you from potential emotional, ethical, or privacy pitfalls. Here’s what to look for:

Check for clinical validation. See if the app is backed by psychologists, universities, or healthcare systems. Avoid tools with vague credentials or anonymous creators.

Look for regulatory compliance. Make sure it follows laws like HIPAA (in the U.S.) or GDPR (in Europe) that protect your personal and health-related data.

Read beyond app store reviews. Look for mentions in trusted medical or mental health publications. Peer-reviewed studies or expert endorsements are even better.

Understand how the AI is trained. Transparent apps disclose how their models were developed and whether they account for bias, culture, or inclusivity.

Review privacy settings and permissions. Choose apps with clear options for data control, and avoid those that request access to unnecessary personal information like your camera or social media.

Mistake #2: Using AI Apps as a Replacement for Human Therapy

Can an App Really Understand You?

AI therapy apps have made mental health support more accessible than ever.

They’re available 24/7, never judge, and don’t require appointments.

But here’s the catch — they’re still machines.

And while they can sound compassionate, there’s a limit to what they can actually feel or comprehend.

As Dr. Lisa Feldman Barrett, a leading neuroscientist, puts it: “AI can simulate empathy, but it cannot truly understand trauma.”

That distinction matters more than most people realize.

Where Things Can Go Wrong

One of the most common and risky mistakes with AI therapy apps is relying on them as a substitute for real, human therapy.

Why is this a problem?

Because trauma, grief, depression, and other complex emotional states require nuance.

And nuance is where AI still stumbles.

An app may offer CBT-style prompts or recommend breathing techniques. But it won’t pick up on subtle emotional cues. It can’t tell if you’re masking suicidal ideation with humor.

It won’t gently challenge your irrational thoughts in the way a trained therapist might.

This gap creates a real risk of misdiagnosis, emotional detachment, and advice that feels tone-deaf — or worse, harmful.

How to Use AI Supportively — Not Substitutively

So what’s the better approach?

Use AI apps for what they do well: journaling prompts, guided meditations, mood tracking, or basic cognitive restructuring exercises.

But when it comes to deeply rooted issues — childhood trauma, severe anxiety, relationship breakdowns — bring in a licensed human professional.

Whether it’s in-person therapy or telehealth, human connection remains irreplaceable.

Remember, AI can be your companion in the journey. Not your counselor.

Mistake #3: Ignoring Privacy and Data Sharing Risks

How Safe Is Your Mental Health Data, Really?

AI therapy apps often ask you to open up — about your emotions, habits, thoughts, even your voice patterns. But before you do, pause for a moment.

Have you thought about where all that deeply personal data actually goes?

One of the most overlooked mistakes with AI therapy apps is assuming your data is automatically safe. Unfortunately, that’s not always the case.

The Hidden Dangers of Digital Vulnerability

Many mental health apps track not just what you type, but how often you engage, your mood patterns, and sometimes even biometric details.

Some go further, analyzing voice tone or facial expressions to “understand” emotional states.

But here’s the problem — what happens to that data?

In one real case, a popular chatbot was later found to be using conversations to train its underlying algorithm — without users’ informed consent.

That means your personal struggles could be repurposed for product development, not healing.

And in the event of a breach?

This kind of data is far more damaging than, say, your email being hacked. We’re talking about your psychological profile, emotional patterns — the very core of your identity.

How to Protect Yourself

Start by reading the app’s privacy policy — yes, actually read it.

Look for terms like “end-to-end encryption,” “data anonymization,” and “third-party access.”

Choose tools that let you control what’s stored and shared.

Avoid apps that request access to social media or collect biometric data without clear justification.
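If a privacy policy is long, a quick text search can point you to the passages worth reading closely. The sketch below is one possible way to do that in Python, not a vetted auditing tool. The function name `find_policy_terms` and the sample policy text are hypothetical, invented for illustration. Note that a match only tells you a term appears; a sentence mentioning "third-party access" may describe sharing you would rather avoid, so read every hit in context.

```python
import re

# The three terms suggested above. Finding a term only shows where to read;
# it says nothing about whether the policy actually protects you.
TERMS = ("end-to-end encryption", "data anonymization", "third-party access")


def find_policy_terms(policy_text, terms=TERMS):
    """Return, for each term, the sentences in the policy that mention it."""
    # Naive sentence split: break after '.', '!' or '?' followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", policy_text.strip())
    hits = {term: [] for term in terms}
    for sentence in sentences:
        lowered = sentence.lower()
        for term in terms:
            if term in lowered:
                hits[term].append(sentence)
    return hits


# Hypothetical policy excerpt, written for this example only.
sample_policy = (
    "Messages are protected with end-to-end encryption. "
    "We may allow third-party access to usage statistics for analytics. "
    "You can delete your account at any time."
)

for term, sentences in find_policy_terms(sample_policy).items():
    status = "mentioned" if sentences else "NOT mentioned"
    print(f"{term}: {status}")
    for sentence in sentences:
        print(f"  > {sentence}")
```

A term that is never mentioned is worth noticing too: if a policy says nothing about encryption or anonymization, that silence is a reason to ask questions before you share anything sensitive.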

Because protectin

Mistake #4: Expecting Instant Results or “One-Size-Fits-All” Solutions

Is AI Therapy a Magic Wand?

You download an AI mental health app, answer a few prompts, and wait.

Maybe you expect clarity, emotional relief, or even transformation — within days.

But nothing changes.

You feel the same. Sometimes worse.

What went wrong?

This is another common mistake with AI therapy apps: expecting quick fixes from a generic tool.

It’s like installing a fitness app and expecting six-pack abs by the weekend. Doesn’t work that way.

Why AI Can’t Personalize Like a Human

AI therapy apps are built on patterns, probabilities, and large data sets.

They’re trained to recognize general symptoms and offer standard techniques — CBT prompts, mindfulness reminders, gratitude journaling.

But here’s the catch: they don’t know you.

Not your cultural background, your personal trauma, your relationship history, or your triggers.

The advice they give can sometimes feel oddly off base or too generic.

And when that happens, it’s easy to feel discouraged or even doubt the process entirely.

That emotional letdown can be dangerous if you’re already vulnerable.

The Smarter Way to Use AI Tools

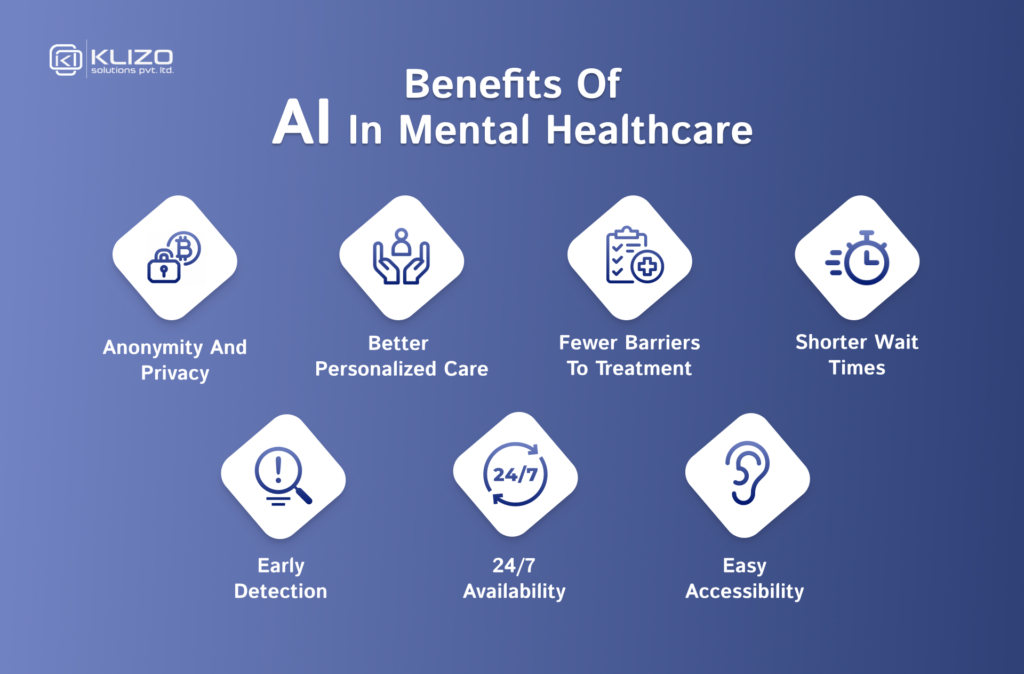

Source: Klizos

Instead of chasing instant results, shift your mindset.

Use these tools to build habits — not miracles.

Combine them with things that do move the needle over time: daily journaling, regular walks, moments of stillness, intentional reflection.

Set small, achievable goals. Track your progress weekly, not hourly.

Because true emotional well-being isn’t driven by algorithms.

It’s built with consistency.

And just like any real growth — it takes time.
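For readers who keep their own mood log, the advice to track progress weekly rather than hourly can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the 1 to 10 rating scale, the `mood_log` entries, and the function name `weekly_averages` are all hypothetical and do not come from any particular app.

```python
from collections import defaultdict
from datetime import date
from statistics import mean


def weekly_averages(entries):
    """Group (date, rating) pairs by ISO week and average each week's ratings."""
    by_week = defaultdict(list)
    for day, rating in entries:
        iso = day.isocalendar()  # (ISO year, ISO week number, weekday)
        by_week[(iso[0], iso[1])].append(rating)
    # Sort by (year, week) so the summary reads in chronological order.
    return {week: round(mean(ratings), 2) for week, ratings in sorted(by_week.items())}


# Hypothetical self-reported ratings on an assumed 1-10 scale.
mood_log = [
    (date(2024, 1, 1), 4),
    (date(2024, 1, 3), 5),
    (date(2024, 1, 5), 3),
    (date(2024, 1, 8), 5),
    (date(2024, 1, 10), 6),
    (date(2024, 1, 12), 7),
]

for (year, week), average in weekly_averages(mood_log).items():
    print(f"{year} week {week}: average mood {average}")
```

Averaging by week smooths out the ups and downs of individual days, which makes slow, steady change easier to see than it would be in a day-by-day view.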

Mistake #5: Not Monitoring Your Emotional Dependency on the App

Are You Leaning Too Hard on the App?

AI therapy tools are designed to support your emotional well-being — not become the center of it.

But for many users, that line starts to blur.

You open the app multiple times a day, not out of need, but habit.

You feel uneasy when it doesn’t load.

You haven’t spoken to a friend about your feelings in weeks.

Sound familiar?

This kind of compulsive reliance is one of the subtler but more serious mistakes with AI therapy apps.

When Help Becomes a Crutch

It’s easy to think: “Well, at least I’m doing something for my mental health.” True. But when the app becomes your primary — or only — outlet, it may be doing more harm than good.

Apps are structured, predictable, non-judgmental.

Real people? Not always.

That contrast can make AI seem safer than opening up to a human.

But this safety can turn into emotional isolation.

Over time, it may reduce your motivation to seek real-world support — or even acknowledge when it’s needed.

A few warning signs:

You hide your app usage from others.

You feel anxious when you can’t access it.

You resist talking to loved ones about how you really feel.

Build Boundaries, Not Walls

Use AI tools to reflect, not retreat. They’re great for tracking moods, journaling thoughts, or managing short-term stress.

But take intentional breaks. Reconnect offline.

Reflect on your emotional progress without the app.

Balance is the key. AI is a tool — not a substitute for human connection.

And no app, however advanced, can replace the comfort of being seen and heard by someone who truly cares.

4 Expert-Recommended AI Therapy Apps That Get It Right

Looking to try AI therapy but unsure where to start?

Not all apps are created equal — but some do stand out for their transparency, effectiveness, and ethical design.

Here are four expert-recommended AI mental health apps that are designed to sidestep these common pitfalls and offer meaningful support.

1. Wysa – Clinically Backed and CBT-Focused

Wysa is one of the most widely respected AI therapy apps in the space.

Used by healthcare providers and even integrated into some hospital systems, it’s built on evidence-based Cognitive Behavioral Therapy (CBT) techniques.

While the AI chatbot offers immediate support, the app also lets you connect with licensed therapists for deeper interventions.

Best for: Structured self-help and clinically validated guidance.

2. Reflectly – For Mindfulness and Journaling

If you’re looking for a lighter, introspective experience, Reflectly focuses on helping users cultivate daily gratitude and emotional awareness through smart journaling.

Its AI uses personalized prompts to guide reflection in a positive, engaging way — ideal for beginners.

Best for: Building a mindfulness habit and emotional clarity.

3. Youper – 24/7 Mood Tracking with Therapist Access

Youper blends AI-driven mood tracking with psychological insights.

Its biggest strength?

A hybrid model where you can also consult human therapists through the platform.

It’s a practical bridge between automation and personal care.

Best for: Tracking mental health trends and seamless escalation to human support.

4. Replika – Emotional Support with Boundaries

Replika is an AI chatbot originally built for companionship and now widely used for emotional expression and stress relief.

It’s not a therapist, but it can be a helpful tool for processing feelings.

Just remember: Use it as a supplement, not a substitute.

Best for: Conversation practice, emotional processing, and stress relief.

Remember: even with expert picks, always do your homework.

Check privacy policies, clinical affiliations, and whether the app aligns with your specific needs.

Ideal Use Cases of AI Therapy Apps

| App Name | Key Benefits | Ideal Use Cases | Potential Future Developments |
|---|---|---|---|
| Wysa | Clinically backed; uses CBT methods; employed in healthcare systems | Users needing structured self-help; early-stage therapy seekers; those preferring evidence-based tools | Deeper AI-human therapist integration; personalized recovery journeys via AI learning |
| Reflectly | Promotes mindfulness; AI-guided journaling; emotionally engaging UI | Beginners to mental wellness; habitual gratitude practice; daily emotional check-ins | Adaptive mood-based journaling prompts; voice-based journaling and emotion detection |
| Youper | Real-time mood tracking; combines AI with therapist access; personalized behavioral insights | Users needing frequent monitoring; those transitioning to or from therapy; individuals managing anxiety/depression | Advanced mental health analytics; smart escalation alerts to therapists |
| Replika | AI companion for emotional expression; safe space for venting; learns your conversational style | Users seeking non-judgmental conversations; emotional regulation practice; stress or loneliness relief | Emotionally aware AI avatars; integrations with AR/VR for immersive support |

FAQs

What are AI therapy apps, and how do they work?

AI therapy apps use algorithms to provide mental health support through features like journaling, CBT exercises, and mood tracking. They simulate conversation or guidance based on psychological frameworks.

Can AI therapy apps replace seeing a real therapist?

No. They can complement therapy but cannot replace the depth, empathy, or diagnosis a licensed human therapist provides. Use them as a support tool—not a substitute.

Are AI mental health apps safe to use?

They can be, but only if you check for privacy standards like HIPAA/GDPR compliance. Always review the app’s data policy before sharing personal information.

What are the risks of relying solely on AI mental health tools?

Over-reliance may lead to emotional detachment, generic advice in complex situations, or neglecting real human support. Balance is essential for meaningful results.

Do AI therapy apps give personalized advice?

Not truly. Most provide generalized suggestions based on patterns, not your unique life context. Human therapists can adapt; AI still can’t.

How do I choose a trustworthy AI therapy app?

Look for clinical backing, therapist access, end-to-end encryption, and transparent data use policies. Trusted platforms and expert reviews help too.

What are signs of emotional dependency on an AI app?

If you feel anxious when the app is down or avoid talking to people about your feelings, that’s a red flag. Apps should support, not isolate.

Can AI therapy apps deliver quick mental health results?

No. Like fitness or diet apps, the benefits take time and consistency. Don’t expect emotional transformation overnight.

Conclusion

AI mental health tools are here to stay. They offer round-the-clock support, emotional check-ins, and structured self-help strategies grounded in psychological science.

But their real power lies in how you use them. When used responsibly and in combination with human support, they can genuinely enhance your emotional well-being.

That said, it’s easy to fall into common traps — like trusting apps without doing your research, expecting them to replace real therapy, overlooking privacy risks, hoping for quick results, or becoming too emotionally reliant on digital companions. These subtle missteps can undermine your progress instead of supporting it.

The key is awareness. Use these tools as companions, not crutches. Let them guide reflection, not replace connection. And above all, remember this: you’re not alone.

With the right balance of human insight and digital support, AI can be a meaningful ally on your path to better mental health.