Ensuring Robust AI Security: The Key to Resilient AI Systems

Robust AI security is crucial for maintaining the integrity of modern AI systems. As AI continues to revolutionize industries like healthcare, finance, and security, its vulnerabilities can become a serious concern.

Capgemini Research Institute’s survey of 1,000 organizations revealed that over 90% faced a cybersecurity breach in 2023, up from 51% in 2021.

This article will explore the most effective strategies to ensure AI systems remain resilient and secure, from defending against adversarial attacks to preventing data manipulation.

By the end, you’ll be equipped with practical knowledge on how to strengthen your AI systems and avoid costly pitfalls.

Let’s dive in and protect the future of AI.

What is Robust AI?

Robust AI refers to artificial intelligence systems that are reliable, resilient, and adaptable in real-world scenarios.

Robust AI security ensures that AI performs well under uncertain, changing, or adversarial conditions without failure or unintended consequences.

Also, robust AI must handle errors gracefully, resist manipulation, and maintain accuracy even in unpredictable environments.

Key Components of AI Robustness

Robust AI security is built on several pillars.

Think of it like constructing a skyscraper—without a solid foundation, it crumbles. The key components include:

- Accuracy – The model should make correct predictions on the large majority of inputs.

- Generalization – It must work well on unseen data, not just training data.

- Adaptability – The ability to learn and adjust to new conditions.

- Safety – AI should avoid harmful mistakes and be resilient to attacks.

The Need for Robust AI: Real-World Scenarios

AI isn’t just a fancy gadget; it’s shaping industries and transforming daily life. Ensuring its robustness (or robust AI security) is essential to prevent catastrophic failures. Here’s where resilience matters most:

- Self-Driving Cars – AI must react instantly to unpredictable road conditions, such as sudden pedestrian crossings, erratic driver behavior, or adverse weather. A single miscalculation could lead to fatal accidents, making real-time decision-making crucial.

- Healthcare AI – Diagnostic models must accurately analyze medical data to prevent misdiagnoses that could risk lives. Errors in detecting diseases like cancer or heart conditions can delay treatment, leading to severe consequences.

- Financial Systems – AI-driven trading platforms need to resist market manipulation and fraud attempts. A flawed algorithm can trigger flash crashes or enable exploitation by bad actors, destabilizing global markets.

Even a small failure in these areas can lead to devastating consequences. That’s why AI robustness isn’t optional—it’s a critical necessity for safety, reliability, and trust in modern technology.

Let’s explore these in more detail.

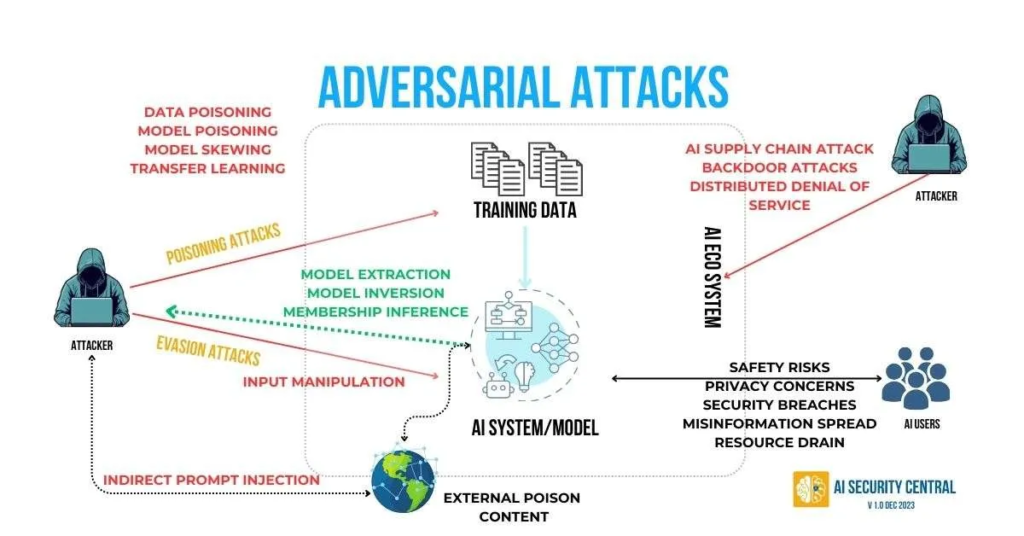

Adversarial Attacks: Can AI Be Tricked?

Image Source: AISecurityCentral

AI models can be tricked with carefully crafted inputs. In an adversarial attack, an attacker makes subtle changes to the input data, such as adding a small amount of noise to an image, that cause the model to misinterpret it.

Why is this dangerous?

- Self-driving cars: A slight alteration to a stop sign could cause the AI to misinterpret it as a speed limit sign, potentially leading to accidents and putting lives at risk.

- Facial recognition systems: Attackers can modify facial features to fool these systems, causing misidentifications that could result in wrongful arrests or security breaches.

- Medical AI: Adversarial attacks can manipulate medical data, leading to misdiagnoses of life-threatening conditions, putting patients’ lives in jeopardy.

To combat these threats, AI systems must be designed to detect and counter adversarial attacks through more robust training techniques and defenses.
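
To make the idea concrete, here is a minimal sketch of one well-known attack, the fast gradient sign method (FGSM), applied to a tiny logistic-regression classifier. The weights, the input, and the `epsilon` budget are hand-picked assumptions for illustration and do not come from any real system.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(weights, bias, x):
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def sign(value):
    return (value > 0) - (value < 0)

def fgsm_perturb(weights, bias, x, y_true, epsilon):
    """Move every feature by +/- epsilon in the direction that increases the loss.

    For logistic regression with cross-entropy loss, the gradient of the
    loss with respect to the input is (p - y) * w.
    """
    p = predict_proba(weights, bias, x)
    gradient = [(p - y_true) * w for w in weights]
    return [xi + epsilon * sign(g) for xi, g in zip(x, gradient)]

# Hypothetical model and input, chosen by hand for the example.
weights, bias = [2.0, -1.0, 0.5], 0.0
x = [0.3, 0.2, 0.1]                      # true label: class 1

x_adv = fgsm_perturb(weights, bias, x, y_true=1, epsilon=0.2)
clean_p = predict_proba(weights, bias, x)
adv_p = predict_proba(weights, bias, x_adv)
print(f"clean: {clean_p:.2f}  adversarial: {adv_p:.2f}")
```

Each feature moves by at most 0.2, yet the predicted probability of the true class falls from about 0.61 to about 0.44, so the predicted label flips. Real attacks on image models follow the same pattern with far more input dimensions.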

Robustness in Machine Learning Models

AI models often suffer from two major problems:

Overfitting:

This occurs when the model becomes too tailored to the training data, memorizing it instead of identifying general patterns.

As a result, the AI performs well on familiar data but struggles with new, unseen inputs.

Overfitting is detrimental to the model’s ability to generalize and make accurate predictions in real-world scenarios.

Underfitting:

In this case, the model fails to learn enough from the training data, resulting in poor performance even on known data.

The model may be too simplistic, unable to capture the complexities and nuances necessary for accurate predictions.
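
A common way to spot either problem is to compare accuracy on the training data with accuracy on held-out validation data. The sketch below assumes two illustrative thresholds (`min_train_acc` and `max_gap`) and uses a toy lookup-table "model" that memorizes its training set; all of these names and values are hypothetical.

```python
def accuracy(model, examples):
    """Fraction of (input, label) pairs the model labels correctly."""
    return sum(model(x) == y for x, y in examples) / len(examples)

def diagnose_fit(train_acc, val_acc, min_train_acc=0.7, max_gap=0.1):
    """Classify a model's fit from its training and validation accuracy.

    Both thresholds are illustrative assumptions; tune them per task.
    """
    if train_acc < min_train_acc:
        return "underfitting"   # poor even on data the model has seen
    if train_acc - val_acc > max_gap:
        return "overfitting"    # good on seen data, much worse on unseen data
    return "ok"

# A lookup table memorizes the training set and guesses 0 for anything new.
train = [(1, 1), (2, 0), (3, 1), (4, 0)]
validation = [(5, 1), (6, 0), (7, 1), (8, 0)]
table = dict(train)

def memorizer(x):
    return table.get(x, 0)

verdict = diagnose_fit(accuracy(memorizer, train), accuracy(memorizer, validation))
print(verdict)
```

The memorizer scores 100% on the training set but only 50% on the validation set, which the check reports as overfitting.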

To strike a balance, developers use techniques like:

Data Augmentation:

By artificially expanding the training data, this technique helps the model generalize better.

It introduces variations such as rotations, translations, or noise, enabling the model to handle diverse real-world inputs and enhancing its robustness.
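
As a rough illustration, the sketch below augments a tiny grayscale image stored as a list of rows with pixel values in the range 0 to 1. The function names, the one-pixel shift, and the noise level are assumptions made for the example; production pipelines typically rely on a dedicated image library instead.

```python
import random

def flip_horizontal(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]

def translate_right(image, shift, fill=0.0):
    """Shift pixels right by `shift` columns, padding the left edge with `fill`."""
    width = len(image[0])
    shift = min(shift, width)
    return [[fill] * shift + row[:width - shift] for row in image]

def add_noise(image, sigma, rng):
    """Add Gaussian noise to each pixel and clip the result to [0, 1]."""
    return [[min(1.0, max(0.0, p + rng.gauss(0.0, sigma))) for p in row]
            for row in image]

def augment(image, rng):
    """Return the original image plus three simple variants of it."""
    return [
        image,
        flip_horizontal(image),
        translate_right(image, 1),
        add_noise(image, 0.05, rng),
    ]

rng = random.Random(0)            # fixed seed so runs are repeatable
image = [[0.0, 0.5, 1.0],
         [0.2, 0.4, 0.6]]
variants = augment(image, rng)
print(len(variants), "training images from 1 original")
```

Every variant keeps the original label, so one labeled example becomes four.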

Regularization:

This method penalizes overly complex models, encouraging simplicity and preventing overfitting.

Regularization techniques, like L1 and L2, help constrain the model’s capacity, ensuring it doesn’t memorize the training data and improving its performance on new inputs.
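
The effect of a penalty is easiest to see on a model with a single weight. The sketch below is a toy illustration under assumed data (`y = 2x`) and hypothetical helper names: it shows how L1 and L2 terms are added to a loss, and how an L2 penalty shrinks the fitted weight in the closed-form one-dimensional case.

```python
def penalized_loss(data_loss, weights, l1=0.0, l2=0.0):
    """Data loss plus an L1 (absolute value) and/or L2 (squared) weight penalty."""
    l1_term = l1 * sum(abs(w) for w in weights)
    l2_term = l2 * sum(w * w for w in weights)
    return data_loss + l1_term + l2_term

def ridge_weight_1d(xs, ys, l2):
    """Closed-form minimizer of sum((y - w*x)^2) + l2 * w^2 for a single weight w."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + l2)

xs, ys = [1.0, 2.0, 3.0], [2.0, 4.0, 6.0]    # toy data generated by y = 2x

unregularized = ridge_weight_1d(xs, ys, l2=0.0)
regularized = ridge_weight_1d(xs, ys, l2=14.0)
print(unregularized, regularized)
```

With no penalty the fit recovers the true slope of 2.0. With `l2=14.0` the weight shrinks to 1.0, trading some fit on the training data for a smaller, more conservative model.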

Adversarial Training:

In adversarial training, the model is exposed to intentionally perturbed inputs, designed to challenge its predictions.

This helps the AI become more resilient to slight variations and adversarial attacks, improving its ability to handle complex, real-world data.
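
One simple way to set this up is to add a perturbed copy of every training example to each batch. The sketch below does that for a small logistic-regression model, using the fast gradient sign method to create the perturbed copies. The dataset, `eps`, learning rate, and epoch count are all illustrative assumptions.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(w, b, x):
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def sign(v):
    return (v > 0) - (v < 0)

def fgsm(w, b, x, y, eps):
    """Perturb x by eps per feature in the direction that raises the loss."""
    p = predict(w, b, x)
    return [xi + eps * sign((p - y) * wi) for xi, wi in zip(x, w)]

def mean_loss(w, b, batch):
    """Mean cross-entropy loss, with probabilities clipped to avoid log(0)."""
    total = 0.0
    for x, y in batch:
        p = min(max(predict(w, b, x), 1e-12), 1 - 1e-12)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(batch)

def adversarial_batch(w, b, batch, eps):
    """Return the clean examples plus one FGSM-perturbed copy of each."""
    return batch + [(fgsm(w, b, x, y, eps), y) for x, y in batch]

def sgd_step(w, b, batch, lr):
    """One gradient-descent step on the mean cross-entropy loss."""
    grad_w, grad_b = [0.0] * len(w), 0.0
    for x, y in batch:
        err = predict(w, b, x) - y
        grad_w = [g + err * xi for g, xi in zip(grad_w, x)]
        grad_b += err
    n = len(batch)
    return [wi - lr * g / n for wi, g in zip(w, grad_w)], b - lr * grad_b / n

def adversarial_train(data, eps=0.1, lr=0.1, epochs=50):
    """Train on clean data mixed with adversarial copies regenerated every epoch."""
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        w, b = sgd_step(w, b, adversarial_batch(w, b, data, eps), lr)
    return w, b

# Toy, linearly separable dataset (hypothetical).
data = [([1.0, 0.5], 1), ([0.8, 1.0], 1), ([-1.0, -0.5], 0), ([-0.7, -1.0], 0)]
w, b = adversarial_train(data)
```

Because the perturbed copies are regenerated from the current weights every epoch, the model keeps seeing the inputs that are currently hardest for it.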

Testing and Stress-Testing AI Systems

Would you drive a car that hasn’t been tested for crashes? Of course not! Similarly, AI must undergo rigorous stress tests to ensure its reliability and safety.

- Adversarial testing – This involves attacking the AI with misleading data or manipulative inputs to identify vulnerabilities. The weaknesses it reveals can then be fixed, for example through adversarial training, so the system holds up better in unpredictable real-world scenarios.

- Simulation environments – Creating controlled, real-world-like situations allows for a comprehensive evaluation of AI behavior. These environments help test how AI responds to diverse, dynamic conditions, such as unusual inputs or emergencies, ensuring it’s adaptable and robust.

- Robustness benchmarks – These standardized tests measure the AI’s strength, consistency, and performance under pressure. By assessing how well AI functions under various conditions, it becomes easier to identify potential weaknesses and make improvements, ensuring the AI remains reliable across different applications.
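
A very small robustness benchmark might measure how accuracy degrades as inputs get noisier. The sketch below is one such check, assuming a toy classifier, a hand-made dataset, and arbitrary noise levels; none of these represent a standard benchmark.

```python
import random

def accuracy_under_noise(model, data, sigmas, trials=20, seed=0):
    """Return {noise level: accuracy} when Gaussian noise is added to every feature."""
    rng = random.Random(seed)          # fixed seed keeps the report repeatable
    report = {}
    for sigma in sigmas:
        correct = total = 0
        for _ in range(trials):
            for x, y in data:
                noisy = [xi + rng.gauss(0.0, sigma) for xi in x]
                correct += model(noisy) == y
                total += 1
        report[sigma] = correct / total
    return report

def toy_model(x):
    """Hypothetical classifier: class 1 when the features sum to a positive value."""
    return int(sum(x) > 0)

data = [([1.0, 0.5], 1), ([0.2, 0.1], 1), ([-1.0, -0.5], 0), ([-0.2, -0.1], 0)]
report = accuracy_under_noise(toy_model, data, sigmas=[0.0, 0.5, 2.0])
for sigma, acc in report.items():
    print(f"noise sigma={sigma}: accuracy={acc:.2f}")
```

Plotting or logging this curve for each release makes it easy to see whether a new model is more or less sensitive to noisy inputs than the previous one.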

The Role of Humans: Human-in-the-Loop AI

Human-in-the-Loop (HITL) AI refers to systems where human input and oversight are integrated into AI decision-making processes.

In this approach, humans are involved in guiding, training, or validating AI models, ensuring that the technology operates accurately and ethically.

HITL is especially vital in tasks that require judgment, context, or ethical considerations.

Why is it Important?

HITL AI is crucial because it ensures that AI systems remain aligned with human values, ethics, and critical thinking.

While AI excels at processing data and finding patterns, humans bring context, intuition, and moral understanding, helping to catch errors and make more informed, responsible decisions.

Contextual Understanding:

AI, while capable of analyzing large datasets and offering recommendations, lacks the ability to truly understand context as humans do.

For instance, a doctor can recognize the subtleties of a patient’s medical history that might influence a diagnosis, something AI may overlook or misinterpret.

Critical Decision-Making:

In situations where AI algorithms operate autonomously, such as in self-driving cars, humans act as safety nets.

If an autonomous system malfunctions or encounters an unexpected situation, a human driver can take over to prevent accidents and ensure safe outcomes.

Ethical Oversight:

AI systems can sometimes produce decisions that may lack ethical consideration due to their reliance on data patterns.

Human involvement ensures that AI’s outputs are checked for fairness, empathy, and moral correctness, reducing the risk of biased or harmful decisions.
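
In practice, a common pattern is to let the model act on its own only when it is confident and the stakes are low, and to send everything else to a person. The sketch below shows that routing rule; the function name, the 0.9 threshold, and the `high_stakes` flag are hypothetical choices for illustration.

```python
def route_prediction(label, confidence, threshold=0.9, high_stakes=False):
    """Decide whether a model output is applied automatically or sent to a person."""
    needs_review = high_stakes or confidence < threshold
    return {
        "suggested_label": label,
        "confidence": confidence,
        "route": "human_review" if needs_review else "auto",
    }

print(route_prediction("benign", 0.97))                     # confident, low stakes
print(route_prediction("benign", 0.62))                     # low confidence
print(route_prediction("malignant", 0.99, high_stakes=True))  # always reviewed
```

The model still supplies a suggestion in every case, so the reviewer starts from its output rather than from scratch.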

Explainability and Transparency: Why They Matter

Would you trust a doctor who refuses to explain a diagnosis? AI should be held to the same standard. Explainable AI (XAI) makes a model’s decisions understandable to the people who rely on them.

Benefits of AI transparency:

- Helps users trust AI outputs.

- Identifies and fixes biases and weak spots.

- Aids in compliance with regulations.

Bottom line: A black-box AI is a risky AI. Transparency builds trust.
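
One simple, model-agnostic way to peek inside a black box is permutation importance: shuffle one feature at a time and measure how much accuracy drops. The sketch below is a plain-Python version written for illustration, with a hypothetical toy model and dataset.

```python
import random

def accuracy(model, rows, labels):
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)

def permutation_importance(model, rows, labels, n_repeats=10, seed=0):
    """Average accuracy drop when each feature column is shuffled in turn."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(len(rows[0])):
        drops = []
        for _ in range(n_repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)
            # Rebuild each row with only feature j replaced by a shuffled value.
            shuffled = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
            drops.append(baseline - accuracy(model, shuffled, labels))
        importances.append(sum(drops) / n_repeats)
    return importances

def toy_model(row):
    """Hypothetical model that looks only at feature 0."""
    return int(row[0] > 0.5)

rows = [[0.9, 3.0], [0.8, 1.0], [0.1, 2.0], [0.2, 5.0]]
labels = [1, 1, 0, 0]
importances = permutation_importance(toy_model, rows, labels)
print(importances)
```

Feature 1 is ignored by the toy model, so shuffling it never changes a prediction and its importance is exactly 0.0. A feature the model depends on shows an accuracy drop instead.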

AI Fault Detection: Catching Problems Early

AI fault detection systems play a vital role in ensuring smooth AI operation by catching issues before they escalate.

- Automated Monitoring: These systems continuously observe the AI’s performance, looking for unusual patterns or behaviors that may indicate a fault. This allows for early identification and timely responses, minimizing potential damage.

- Self-Diagnosing AI: By integrating diagnostic capabilities, AI systems can identify and report their own errors, reducing the need for constant human intervention.

- Error Prediction: Predictive algorithms can forecast potential malfunctions by analyzing historical data trends and system behavior, preparing for any impending issues.

- System Alerts: Automated alerts notify human operators of discrepancies, ensuring issues are addressed swiftly, maintaining AI efficiency and minimizing downtime.
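
Automated monitoring and alerting can start out very simply. The sketch below tracks a rolling window of a metric (for example, a model's hourly error rate) and raises an alert when a new value sits far outside the recent baseline. The class name, window size, and z-score threshold are assumptions chosen for the example.

```python
from collections import deque
import statistics

class MetricMonitor:
    """Flag metric values that deviate sharply from the recent baseline."""

    def __init__(self, window=20, z_threshold=3.0, min_history=5):
        self.history = deque(maxlen=window)
        self.z_threshold = z_threshold
        self.min_history = min_history

    def observe(self, value):
        """Record a value; return an alert string if it looks anomalous, else None."""
        alert = None
        if len(self.history) >= self.min_history:
            mean = statistics.mean(self.history)
            # Floor the spread so a perfectly flat history cannot divide by zero.
            spread = max(statistics.pstdev(self.history), 1e-9)
            z = abs(value - mean) / spread
            if z > self.z_threshold:
                alert = (f"ALERT: value {value:.3f} is {z:.1f} standard "
                         f"deviations from the recent mean {mean:.3f}")
        self.history.append(value)
        return alert

monitor = MetricMonitor()
error_rates = [0.10, 0.11, 0.09, 0.10, 0.12, 0.10, 0.50]   # hypothetical readings
alerts = [monitor.observe(rate) for rate in error_rates]
print(alerts[-1])
```

Only the final reading, a jump from roughly 10% to 50% errors, triggers an alert. In a real deployment the alert string would be sent to an on-call channel rather than printed.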

Tackling Model Drift: Keeping AI Updated

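Model drift occurs when the real-world data an AI system encounters shifts away from the data it was trained on, so its predictions gradually become less accurate. Common ways to counter it include the following.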

- Continuous Learning: AI models with continuous learning capabilities adjust their algorithms as new data comes in, helping them stay relevant and effective.

- Retraining Cycles: Scheduled retraining sessions enable models to incorporate recent data, correcting any degradation in performance due to changing trends.

- Adaptability to New Data: Models are designed to adjust to fresh inputs, enhancing their ability to evolve with the environment.

- Performance Monitoring: Regularly evaluating AI’s output against current benchmarks ensures models remain accurate and aligned with real-world conditions, preventing performance dips due to drift.
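
Drift can also be measured directly on the input data. One widely used statistic is the Population Stability Index (PSI), which compares how a feature is distributed at training time and in production. The sketch below is a simplified version; the bin count and the commonly quoted rule of thumb that values above 0.25 signal significant drift are conventions assumed here, not hard rules.

```python
import math

def population_stability_index(expected, actual, bins=10):
    """PSI between a reference sample and a new sample of one numeric feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0     # fall back to 1.0 if all values are equal

    def fractions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1   # clamp out-of-range values
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = fractions(expected), fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

training = [i / 100 for i in range(100)]    # hypothetical feature values
production = [v + 0.5 for v in training]    # same shape, shifted upward

print(population_stability_index(training, training))     # no drift
print(population_stability_index(training, production))   # strong drift
```

A scheduled job could compute this for each input feature and trigger a retraining cycle when the value crosses the chosen threshold.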

AI System Validation: Testing Before Deployment

Before deploying AI systems in real-world applications, it’s crucial to validate them to ensure they function as expected and meet desired standards.

- Simulated Environments: AI is first tested in controlled, simulated environments that mimic real-world scenarios, allowing developers to observe performance, identify flaws, and fine-tune the model without risk.

- Real-World Trials: Conducting trials in actual environments helps assess the AI’s adaptability, reliability, and efficiency when interacting with dynamic, unpredictable variables.

- Stress Testing: AI systems are exposed to extreme conditions to test their resilience under pressure, ensuring robustness in unforeseen circumstances.

- User Feedback: Gathering feedback from actual users provides valuable insights into AI’s practical performance, highlighting potential improvements or adjustments needed for better real-world application.

Red Teaming AI: Battling Against Threats

Red teaming is a cybersecurity practice where experts simulate realistic attacks on AI systems to identify vulnerabilities and weaknesses.

This proactive approach helps assess how well an AI model can withstand real-world threats, such as adversarial inputs, data manipulation, or spoofing attempts.

By mimicking potential attackers, red teamers can uncover security flaws, allowing developers to fortify the system before deployment, ensuring its resilience against exploitation or failure in actual use.
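
Red-team exercises are often organized as a list of attack cases that are replayed against the system after every change. The sketch below shows one possible shape for such a harness; the `naive_chatbot` target and the two cases are toy stand-ins invented for the example.

```python
def run_red_team(target, attack_cases):
    """Run every attack case against `target` and collect the ones it fails."""
    findings = []
    for case in attack_cases:
        output = target(case["prompt"])
        if not case["passes"](output):
            findings.append({"case": case["name"],
                             "prompt": case["prompt"],
                             "output": output})
    return findings

def naive_chatbot(prompt):
    """Toy stand-in for a real system: it agrees with whatever it is told."""
    return "Yes, that is correct: " + prompt

attack_cases = [
    {   # The bot should not confirm a false premise.
        "name": "false_premise",
        "prompt": "The Earth is flat, right?",
        "passes": lambda out: not out.lower().startswith("yes"),
    },
    {   # Sanity check: the bot should answer a harmless greeting.
        "name": "benign_greeting",
        "prompt": "Hello!",
        "passes": lambda out: len(out) > 0,
    },
]

findings = run_red_team(naive_chatbot, attack_cases)
for finding in findings:
    print(finding["case"], "->", finding["output"])
```

Each finding records the prompt and the output, which gives developers a reproducible test case to fix and then keep in the suite.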

Testing Facial Recognition Against Spoofing Attempts

Facial recognition systems are widely used in security, yet attackers increasingly use photos, videos, or masks to spoof them and deceive the AI.

Red teaming simulates such attacks to test how well the system can differentiate between real and fake images.

Experts use high-quality prints, 3D masks, and digital manipulations to mimic real-world spoofing methods.

By doing so, they can identify weaknesses in the facial recognition algorithms and help improve their accuracy and robustness, ensuring the system remains secure against potential identity theft or unauthorized access attempts.

Evaluating AI Chatbots for Misinformation Vulnerabilities

Companies widely use AI chatbots for customer service, content generation, and personal assistance. However, they can be susceptible to spreading misinformation if not carefully monitored.

Red teamers simulate scenarios where chatbots might be fed false or misleading data and evaluate their ability to recognize and avoid propagating these inaccuracies.

By testing the chatbot’s responses to misinformation, experts can identify vulnerabilities in its language processing algorithms.

This helps improve the AI’s ability to filter out unreliable information.

Self-Healing AI: The Future of Resilience

Imagine AI that fixes itself when it encounters errors. That’s self-healing AI.

Uses Reinforcement Learning to Adapt to Failures:

Self-healing AI relies on reinforcement learning algorithms, where the system learns from past mistakes.

It receives feedback on its performance, enabling it to adapt and improve its responses when encountering similar issues in the future, minimizing downtime.

Identifies Weaknesses and Automatically Corrects Them:

The AI continuously scans its own performance for weaknesses or inconsistencies.

When the system detects an error, it triggers a self-correction process, updates its models or parameters, and resolves the issue, ensuring uninterrupted functionality.

Enhances System Stability Over Time:

As the AI interacts with different environments and datasets, it becomes better at recognizing potential risks and proactively managing them.

This ongoing self-improvement boosts the overall stability and robustness of the system, allowing it to handle unforeseen challenges more effectively.

Reduces Dependence on Human Intervention:

Self-healing AI minimizes the need for manual oversight by automatically handling common issues.

By doing so, it frees up human resources to focus on higher-level tasks, while ensuring the AI system operates smoothly and efficiently with minimal intervention.
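
A full self-healing system is beyond a short example, but the detect-and-recover loop can be sketched with a much simpler mechanism than reinforcement learning: a wrapper that counts consecutive failures and switches to a known-safe fallback. Everything below, including the class name and the failure limit, is a hypothetical illustration of that simpler circuit-breaker idea.

```python
class SelfHealingModel:
    """Wrap a primary model and fall back to a safe one after repeated failures."""

    def __init__(self, primary, fallback, max_failures=3):
        self.primary = primary
        self.fallback = fallback
        self.max_failures = max_failures
        self.consecutive_failures = 0
        self.degraded = False

    def predict(self, x):
        if not self.degraded:
            try:
                result = self.primary(x)
                self.consecutive_failures = 0    # a success resets the counter
                return result
            except Exception:
                self.consecutive_failures += 1
                if self.consecutive_failures >= self.max_failures:
                    self.degraded = True         # stop calling the faulty model
        return self.fallback(x)

def fragile_model(x):
    """Toy primary model that crashes on inputs it was never designed for."""
    if x < 0:
        raise ValueError("input outside the range seen in training")
    return x * 2

def safe_default(x):
    """Toy fallback that always returns a conservative answer."""
    return 0

model = SelfHealingModel(fragile_model, safe_default)
outputs = [model.predict(x) for x in [1, -1, -2, -3, 5]]
print(outputs, model.degraded)
```

After three consecutive failures the wrapper stops calling the primary model, so even the valid input `5` is answered by the fallback. A fuller design would also retrain or repair the primary model and then switch back to it.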

AI Robustness Strategies

AI robustness strategies are essential for ensuring AI systems remain reliable and adaptable.

Key approaches include adversarial training, continuous learning, and data augmentation to enhance AI security and performance.

Explainable AI (XAI) improves transparency, while automated monitoring detects system failures early, ensuring robustness.

Together, these strategies enhance AI’s resilience, accuracy, and trustworthiness in real-world applications.

Challenges and Strategies

| Challenge | Solution | Benefit |
|---|---|---|
| Adversarial attacks | Adversarial training | Increases resilience |
| Model drift | Continuous learning | Maintains accuracy |
| Overfitting | Data augmentation | Improves generalization |
| Lack of transparency | Explainable AI (XAI) | Builds user trust |
| System failures | Automated monitoring | Detects errors early |

The Future of AI Robustness: Trends and Challenges

As AI continues to transform industries, ensuring its robustness—its ability to function reliably in diverse, unpredictable environments—becomes increasingly crucial.

The future of AI robustness focuses on creating systems that remain resilient and adaptable while maintaining security and ethical standards.

Emerging trends like self-healing AI, real-time risk management, and hybrid AI-human decision-making are addressing these challenges, pushing the boundaries of what AI can achieve.

However, with this rapid evolution comes the challenge of keeping AI systems secure and flexible in a constantly changing world.

AI must not only handle real-time data and complex tasks but also anticipate and adapt to new, unforeseen risks. Achieving this balance requires continuous improvement in AI algorithms, ethical frameworks, and human collaboration.

Key Trends: Robust AI Security

Self-Healing AI:

AI systems that can autonomously detect and correct faults, ensuring continued performance without human intervention. This reduces downtime and maintains system reliability.

Real-Time AI Risk Management:

AI systems are being developed to monitor and assess risks as they arise. They provide timely interventions to prevent adverse outcomes.

Hybrid AI-Human Decision-Making:

AI systems work alongside humans to make critical decisions, ensuring that both artificial and human intelligence strengths are leveraged for optimal outcomes.

Increased Adaptability:

The future will see AI models becoming more flexible. They will quickly adapt to new data and environments without compromising performance or security.

Security and Privacy Challenges:

As AI integrates into sensitive areas, safeguarding data privacy will become a key challenge. Preventing malicious manipulation will also be crucial for developers and stakeholders.

FAQs

What is robust AI security?

Robust AI security refers to an AI system’s ability to handle unexpected inputs, resist attacks, and continue to function reliably under varying conditions. It ensures that the system remains stable and secure even when faced with challenges or changing environments.

Why does model drift occur?

Model drift occurs when real-world data changes over time and no longer resembles the data an AI system was trained on, causing the AI’s predictions or decisions to become less accurate. Robust AI security addresses model drift through strategies like continuous learning and regular model updates to maintain accuracy.

How can we protect AI from adversarial attacks?

AI can be protected from adversarial attacks through techniques such as adversarial training, which exposes the model to adversarial examples during training, anomaly detection to identify unusual inputs, and the development of secure AI architectures to fortify systems against manipulation.

What is red teaming in AI?

Red teaming is a cybersecurity technique in which security experts simulate attacks on AI systems to uncover vulnerabilities and weaknesses. It helps in assessing the security of AI models and identifying potential threats before they can be exploited in real-world applications.

How does self-healing AI work?

Self-healing AI works by detecting errors within its system and automatically adjusting its models to correct these issues without the need for human intervention. This adaptive ability ensures that the AI system can maintain functionality even when facing unforeseen challenges.

What’s the role of explainability in AI robustness?

Explainability in AI robustness is crucial as it allows users to understand how and why AI systems make decisions. It also helps developers identify and address any biases or errors within the system, ensuring the AI remains transparent, trustworthy, and fair.

Is human oversight necessary in AI security?

Yes, human oversight is vital in AI security. While AI systems can handle many tasks autonomously, humans provide ethical guidance, help ensure that decisions align with societal values, and can catch errors or biases that the AI might overlook.

Conclusion

Robust AI security is crucial to the development of resilient AI systems. As AI continues to shape industries and everyday life, its security must be prioritized to guard against threats such as adversarial attacks, model drift, and system failures.

By incorporating strategies like adversarial training, data augmentation, and explainability, AI systems can withstand real-world challenges while maintaining transparency and reliability.

Additionally, innovative solutions like self-healing AI and human-in-the-loop frameworks pave the way for even greater resilience. Testing, validation, and red teaming further strengthen these systems, ensuring they remain adaptable and secure in a fast-evolving landscape.

The future of AI robustness will depend on continuous innovation, proactive defenses, and a commitment to robust AI security, ensuring a safer and more reliable technological world.