Why Trustworthy AI Content Is a Game-Changer in 2025

Artificial intelligence has become the heartbeat of modern innovation. From writing essays to suggesting what we should watch next, AI is everywhere. But here’s the catch: not everything online is trustworthy AI content.

Not all AI-generated content is reliable, ethical, or even safe. That’s where trustworthy content steps in as a game-changer in 2025.

Why does this matter now more than ever? According to Capgemini, 73% of global consumers trust generative AI content, so the stakes couldn’t be higher.

But trust isn’t given—it’s earned.

Let’s dive into why trustworthy AI is crucial and how it’s shaping the future.

What Is Trustworthy AI Content?

At its core, trustworthy AI content is like a good friend: reliable, honest, and fair. It doesn’t just churn out answers; it ensures those answers are ethical, accurate, and unbiased.

Trustworthy AI content upholds high standards of fairness, ensuring that the decisions made by algorithms do not favor one group over another. It prioritizes privacy, ensuring that user data is handled securely and ethically, preventing misuse or exploitation.

Imagine an AI tool helping doctors diagnose illnesses. If the AI isn’t transparent or reliable, lives are at stake.

Trustworthy AI ensures that these systems are accountable and work as intended.

Key Characteristics of Trustworthy AI Content

Trustworthy AI content is characterized by transparency, fairness, robustness, privacy, and accountability.

These key principles ensure that AI systems are not only reliable and ethical but also safeguard user interests and foster long-term trust.

Transparency: Crucial for Trustworthy AI Content

Transparency in AI is like following a recipe—users can see every step that leads to the final outcome.

By making decision-making processes visible and easy to interpret, AI fosters trust and confidence.

Whether it’s a healthcare diagnosis or a credit approval, transparency ensures users understand why certain decisions are made.

This openness is vital in preventing misunderstandings and building faith in AI systems, especially in areas where precision and fairness are critical.

Fairness: Ethical and Balanced Outcomes

Fairness in AI ensures that AI treats everyone equally, without favoritism or bias.

Imagine a judge who makes decisions based solely on facts—AI systems should work the same way.

By implementing safeguards against bias, developers can ensure AI outcomes are ethical and inclusive.

This characteristic is especially important in areas like recruitment, lending, and education, where impartiality matters most.

Fairness in AI creates a level playing field, and fair AI content builds trust across diverse groups of users.

Robustness: Built to Endure

Robustness ensures AI systems are secure, reliable, and resistant to failures or attacks. Think of it as a fortress—built to withstand challenges and safeguard its core.

A robust AI system is resilient: it handles errors gracefully and adapts to changing conditions, making it dependable even in high-stakes environments like healthcare or finance.

Error Recovery: Robust AI systems can quickly recover from errors without disrupting the overall functionality. Just like a safety net, they ensure minimal damage when something goes wrong.

Scalability: These AI systems are designed to scale effectively, handling increasing loads or complexity without sacrificing performance. They adapt seamlessly as demands grow, much like a well-oiled machine.

Resistance to Attacks: Robust AI systems are built to defend against cyberattacks, preventing malicious interventions that could compromise integrity. Think of it as a secure vault, safeguarding valuable data and ensuring user trust.

By prioritizing resilience, developers create systems that users can rely on for consistent and accurate performance, no matter the circumstances.
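The error-recovery idea above can be sketched in a few lines. This is a minimal illustration, not a production pattern: the function names (`predict_with_fallback`, `flaky_model`, `safe_default`) are hypothetical, and the "models" are plain Python functions standing in for real services.

```python
from typing import Callable, Tuple


def predict_with_fallback(
    primary: Callable[[str], str],
    fallback: Callable[[str], str],
    prompt: str,
    max_attempts: int = 2,
) -> Tuple[str, str]:
    """Return (answer, source): try the primary model, then fall back."""
    for _ in range(max_attempts):
        try:
            return primary(prompt), "primary"
        except Exception:
            # A real system would log the error and catch narrower exception types.
            continue
    # Every attempt failed, so degrade gracefully instead of crashing.
    return fallback(prompt), "fallback"


def flaky_model(prompt: str) -> str:
    # Hypothetical model that always fails, to exercise the fallback path.
    raise TimeoutError("model unavailable")


def safe_default(prompt: str) -> str:
    # Hypothetical conservative response used when the primary model is down.
    return "Sorry, I can't answer that right now."


answer, source = predict_with_fallback(flaky_model, safe_default, "What is AI?")
print(source, "->", answer)
```

Returning the source alongside the answer lets the caller tell users when they are seeing a degraded response, which ties robustness back to transparency.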

Privacy: Respecting Personal Boundaries

Privacy in AI is akin to locking your diary away—data is protected and used ethically.

This ensures individuals maintain control over their personal details. AI systems that prioritize privacy encrypt sensitive information and seek user consent before accessing data.

Trustworthy AI upholds the highest standards of data governance, creating a safe digital environment.

By respecting privacy, AI systems build user confidence and encourage more open engagement, especially in areas like healthcare and personal finance.

Accountability: Enhancing Trustworthiness of AI Content

Accountability in AI is like having a clear set of rules for a team—everyone knows their role and takes ownership.

When something goes wrong, trustworthy AI systems have mechanisms to identify the issue and take corrective action.

Developers and organizations remain responsible for the impact of their creations, ensuring transparency and improvement.

Accountability reassures users that their concerns will be addressed, fostering a sense of security and trust in the technology’s long-term reliability.

Examples in Action

- Personalized Learning Platforms: AI tutors customize lessons without bias, empowering students globally.

- Ethical Recommendation Systems: Platforms recommend content without pushing harmful or misleading narratives.

- Healthcare AI: Diagnoses and treatment plans grounded in data, fairness, and precision.

Principles of Trustworthy AI

Trustworthy AI is built on principles that act like a compass, guiding its ethical use.

At the heart of it lies human agency—AI serves as a helpful assistant, not a decision-maker. Human control in AI decision-making ensures AI supports, not replaces, human judgment in shaping outcomes.

Think of a doctor using AI to confirm a diagnosis but still relying on their expertise for the final call. It’s a partnership, not a takeover.

Equally important is AI robustness, ensuring AI systems are resilient.

Whether it’s a chatbot handling a surge of users or a cybersecurity system fending off attacks, robust AI can stand strong.

And privacy is non-negotiable.

Ethical data use ensures users feel like their information is in a vault, accessed only with permission.

Transparency and fairness complete the circle. When algorithms explain their choices—like why a product is recommended—it builds trust.

Fairness ensures inclusivity, steering clear of bias. As Andrew Ng aptly put it, “AI is the new electricity,” illuminating a future that’s bright and equitable.

To truly understand trustworthy AI content, we need to break it down into its foundational principles.

Breakdown of Principles of Trustworthy AI

| Principle | What It Means | Example |
|---|---|---|
| Human Agency and Oversight | AI assists but doesn’t replace human decision-making. | Doctors using AI to confirm diagnoses, not make final decisions. |
| Technical Robustness | Systems must be resilient and secure against errors. | AI chatbots functioning safely even during unexpected spikes in traffic. |
| Privacy and Data Governance | User data is ethically collected and used responsibly. | Apps encrypting data and ensuring user consent for data sharing. |
| Transparency | AI decision-making is explainable and easy to understand. | Algorithms showing why they recommend certain products or services. |
| Fairness | Ensures inclusivity and avoids discrimination. | AI recruitment tools assessing candidates based on skills, not gender bias. |

These principles aren’t just guidelines; they’re the building blocks of a future where AI can be trusted.

Challenges in Building Trustworthy AI Content

While the vision is clear, the path isn’t easy. Building trustworthy AI content comes with its share of challenges.

Disinformation and Bias

AI can amplify misinformation faster than we can blink. For instance, a flawed dataset can teach AI to reinforce stereotypes or spread false narratives.

This leads to unethical outcomes, affecting industries like hiring or news distribution.

The challenge is ensuring datasets are clean, diverse, and truly representative.

After all, no one wants an algorithm with a hidden agenda.

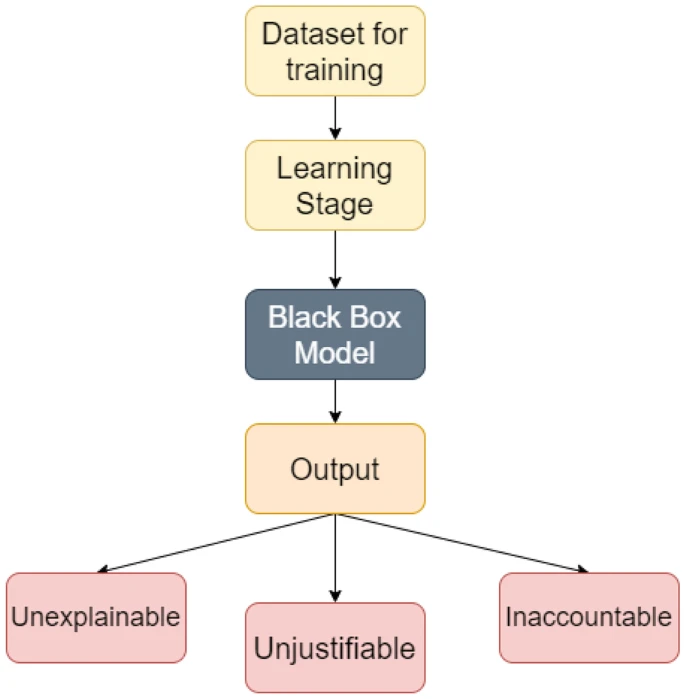

Lack of Transparency in AI Content

The “AI black box” refers to systems whose decision-making processes are not transparent or easily understood by humans. This lack of clarity makes it difficult to interpret how and why AI arrives at certain conclusions or outputs.

Image Source: SpringerNature

How can we rely on AI if we don’t know how it works?

Imagine using a GPS that hides its route logic—it’s unnerving! Transparency ensures users can understand and question AI decisions, making trust a two-way street.

Without this, skepticism naturally grows.

Safety Concerns

Hallucinations in large language models are like a loose cannon.

Hallucinations in Large Language Models (LLMs) refer to the generation of factually incorrect, fabricated, or nonsensical information.

Putting AI safety first is crucial because these errors can involve false facts, non-existent sources, or contradictions. Despite their inaccuracy, the model often presents these responses confidently.

These unpredictable responses can lead to misinformation or even harmful advice.

Ensuring models stay accurate, reliable, and aligned with human values is vital. The stakes are high, especially in healthcare or legal industries where mistakes can be costly.

Ethical Concerns

AI’s environmental impact and the risk of job displacement spark significant debates.

Is the energy used in training models worth it?

Can displaced workers find alternatives? Striking a balance between innovation and social responsibility remains crucial to avoid fostering resentment or inequality.

Industry Concentration

A handful of corporations dominating AI leads to an uneven playing field.

With power centralized, smaller players and ethical concerns often get sidelined.

Competition fosters innovation, but monopolies can stunt growth and fairness, making democratizing AI access a pressing issue.

Strategies to Build Trustworthy AI Content

Building trustworthy AI content requires strategies like transparency, fairness, and robust security measures. These steps ensure AI systems operate ethically, safeguard user data, and provide clear, reliable outcomes.

Explainability and Transparency

Would you trust a chef who hides their recipe? AI works the same way—transparency builds trust.

Explainability is crucial for trustworthy AI because it helps users understand why an AI made a decision. As understanding grows, skepticism fades.

For example, when a financial AI tool explains why a loan application was approved, it instills confidence.

As AI expert Tim O’Reilly said, “Transparency is the first step toward accountability.”
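To make the loan example concrete, here is a minimal sketch of an explainable decision. It assumes a toy linear scoring model; the weights, baseline, threshold, and feature names are all invented for illustration and do not reflect any real lending system.

```python
# Hypothetical weights for a toy linear credit score (not a real lending model).
WEIGHTS = {"income_k": 0.4, "years_employed": 2.0, "missed_payments": -15.0}
BASELINE = 20.0
APPROVAL_THRESHOLD = 50.0


def explain_decision(applicant: dict) -> dict:
    """Score an applicant and report how much each feature moved the score."""
    contributions = {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}
    score = BASELINE + sum(contributions.values())
    # Rank features by absolute impact so the biggest drivers are listed first.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return {
        "approved": score >= APPROVAL_THRESHOLD,
        "score": score,
        "reasons": ranked,
    }


result = explain_decision(
    {"income_k": 60, "years_employed": 5, "missed_payments": 1}
)
print("Approved:", result["approved"])
for feature, impact in result["reasons"]:
    print(f"  {feature}: {impact:+.1f} points")
```

Because the model is linear, each contribution is exact. More complex models usually need dedicated explanation techniques, and those explanations are approximations rather than a literal readout like this one.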

Fairness and Bias Mitigation

Bias in AI can harm society, but rigorous testing and diverse datasets act as safeguards. It’s like proofreading a manuscript to catch hidden errors.

For instance, companies like LinkedIn now use algorithms that reduce gender bias in job recommendations. Fairness ensures AI works for everyone, not just a select few.
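One simple form of the testing described above is to compare how often each group receives a positive outcome. The sketch below uses made-up data and hypothetical function names; it computes per-group selection rates and their ratio, which is one common fairness check among many and not a complete audit.

```python
from collections import defaultdict


def selection_rates(records):
    """records: iterable of (group, selected) pairs; returns the rate per group."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in records:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so rates are equal
    return min(rates.values()) / highest


# Made-up outcomes for illustration: group A selected 6 of 10, group B 3 of 10.
outcomes = (
    [("A", True)] * 6 + [("A", False)] * 4
    + [("B", True)] * 3 + [("B", False)] * 7
)
rates = selection_rates(outcomes)
ratio = disparate_impact_ratio(rates)
print(rates, round(ratio, 2))
```

A ratio well below 1.0 is a signal to investigate, not proof of bias. A widely used rule of thumb (the "four-fifths rule" from US employment guidance) treats ratios under 0.8 as worth a closer look.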

Robustness

AI must remain reliable under pressure. Imagine a self-driving car that falters during a storm—it’s unacceptable.

Robust AI systems, like Tesla’s, undergo stress tests to ensure resilience. Robustness is about preparing for the unpredictable while maintaining safety and efficiency.

Privacy and Data Governance

AI systems should guard user data like a bank protects its vault. Encryption and anonymization ensure sensitive information stays secure.

For instance, Apple’s focus on on-device data processing prioritizes user privacy.

As Edward Snowden noted, “Arguing that you don’t care about privacy because you have nothing to hide is like saying you don’t care about free speech because you have nothing to say.”
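As a small illustration of the data-protection step, the sketch below replaces a user identifier with a keyed hash and drops direct identifiers before a record is used for analysis. Strictly speaking this is pseudonymization, which is weaker than full anonymization and is not encryption. The field names and helper functions are hypothetical; only Python's standard `hmac`, `hashlib`, and `secrets` modules are used.

```python
import hashlib
import hmac
import secrets

# Fields treated as direct identifiers in this toy example (an assumption).
DIRECT_IDENTIFIERS = {"name", "email"}


def pseudonymize(user_id: str, key: bytes) -> str:
    """Map an ID to a stable token that cannot be reversed without the key."""
    return hmac.new(key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def strip_direct_identifiers(record: dict, key: bytes) -> dict:
    """Drop direct identifiers and replace the user ID with its pseudonym."""
    cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    cleaned["user_id"] = pseudonymize(record["user_id"], key)
    return cleaned


# In practice the key would live in a secrets manager, not in application code.
key = secrets.token_bytes(32)
record = {
    "user_id": "u-1001",
    "name": "Ada",
    "email": "ada@example.com",
    "age_band": "30-39",
}
safe_record = strip_direct_identifiers(record, key)
print(sorted(safe_record))
```

The same user always maps to the same token under a given key, so records can still be linked for analysis, while anyone without the key cannot recover the original ID from the token.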

Education and Awareness

Understanding AI is empowering, whether you’re a developer or a user. Think of it as learning to drive; you need to know the controls and limitations. This is where AI education for non-tech audiences plays a crucial role.

It helps demystify AI concepts for everyday users, making them aware of its benefits and potential risks. Published commitments like Google’s AI Principles help inform the public about ethical AI use.

Knowledge fosters trust and reduces fear of the unknown, ensuring that individuals can make informed decisions while interacting with AI-powered tools.

Accountability and Liability

Who takes responsibility when an AI makes a mistake?

It’s essential to establish accountability frameworks. For example, the European Union’s AI Act, which entered into force in 2024, emphasizes clear lines of responsibility.

Accountability ensures AI can be trusted, knowing there’s a system in place to address any mishaps.

User-Centric AI Content Design

AI should be designed with users in mind, not just businesses.

Think of a smart home assistant that adjusts settings to your needs and preferences.

When AI solutions meet real-world requirements, trust flourishes.

Companies like Amazon are constantly improving Alexa to be more intuitive and responsive to users.

Continuous Improvement and Feedback

AI doesn’t stop learning, and neither should we.

Continuous improvement through feedback loops ensures AI remains relevant and trustworthy.

Many AI products collect user input, such as ratings on individual responses, to refine their models. Feedback helps in enhancing AI systems, making them more reliable and aligned with evolving needs.

Current Measures to Enhance AI Trustworthiness

The world isn’t sitting idle. Governments, organizations, and tech leaders are taking steps to ensure trust in AI.

Frameworks and Guidelines

Governments and international bodies are creating frameworks to promote trustworthy AI content. The European Union’s Ethics Guidelines for Trustworthy AI focus on ensuring that AI systems are transparent, fair, and accountable.

The IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems develops standards and guidance for the design of ethical AI technologies.

These frameworks are essential for aligning AI development with human values and addressing societal concerns.

As the EU’s guidelines emphasize, “AI must serve humanity’s best interests.”

Certification and Auditing

Independent audits play a critical role in maintaining trust in AI.

Just like in other industries, AI systems must undergo thorough certification processes to ensure they comply with ethical standards.

This can include checking for biases, ensuring transparency in decision-making, and verifying the security of user data. Audits act as a safeguard, preventing the misuse of AI.

As with any product, a quality check guarantees that AI serves its purpose ethically and effectively, building confidence among users.

Industry Efforts

Tech companies are stepping up their game in making AI more transparent and ethical.

By adopting Explainable AI (XAI) practices, they aim to create systems where users can understand how AI arrives at its decisions.

Additionally, companies are focusing on bias mitigation strategies to reduce unfairness in AI outputs.

These efforts ensure AI technologies are not only reliable but also accessible to a diverse group of users.

As Sundar Pichai, CEO of Google, once said, “AI is one of the most profound things we’re working on as humanity.”

Collaboration with Academia

To create ethical AI, the tech industry is increasingly collaborating with academic institutions.

Research institutions contribute valuable insights into how AI systems can be made fairer and more transparent. Universities and think tanks are working on developing new methods to detect and correct biases in AI models.

This partnership helps bridge the gap between theory and practice.

In the words of Tim Berners-Lee, “The web does not just connect machines, it connects people.”

Legal and Regulatory Oversight

In addition to frameworks, governments are pushing for stronger legal oversight of AI technologies.

Laws are being enacted that govern how AI interacts with personal data and decision-making processes.

These regulations ensure that AI does not infringe on people’s rights or freedoms. For instance, the GDPR in Europe is a key step toward ensuring that AI respects privacy.

As the saying goes, “Laws are like cobwebs, which may catch small flies, but let wasps and hornets break through.”

Industry Certifications for Trustworthiness

Many organizations are setting up certification programs to identify and validate trustworthy AI systems.

These certifications, similar to ISO standards, provide a badge of trust to companies that follow ethical guidelines in AI development.

They also reassure consumers and stakeholders that the AI systems they are interacting with meet high standards of fairness, privacy, and accountability.

As AI becomes more prevalent, these certifications will play a vital role in distinguishing trustworthy technology from the rest.

User Feedback Integration

Incorporating user feedback into AI design is another way to improve trustworthiness.

Companies are now focusing on gathering insights from users to identify and resolve issues, such as biases or inaccurate outputs.

This collaborative approach ensures that AI systems evolve based on real-world experiences.

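A line often attributed to Henry Ford warns, “If I had asked people what they wanted, they would have said faster horses.” Feedback is most useful when it is read for the underlying need rather than taken as a literal specification.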

By involving users in the development process, AI can better serve its audience and build stronger trust.

Future Directions and the Roadmap

The journey doesn’t end here. Trustworthy AI content will evolve, guided by key players and policies.

Role of Policymakers

Governments play a pivotal role in shaping the future of trustworthy AI.

By developing adaptive regulations, they can address emerging risks like disinformation and privacy concerns.

For example, the EU’s General Data Protection Regulation (GDPR) is a step in the right direction. Policymakers must anticipate changes in AI technology and create guidelines that protect citizens while fostering innovation.

As Albert Einstein once said, “The world is in greater peril from those who tolerate or encourage evil than from those who actually commit it.”

Collaboration Across Sectors

AI development shouldn’t be siloed.

Demis Hassabis, CEO of DeepMind, shares, “The future of AI depends on the fusion of human intelligence and machine learning. Collaboration is where the power lies.”

Academia, the tech industry, and governments must collaborate to create ethical AI frameworks.

By pooling resources and expertise, these sectors can create comprehensive policies and systems that address societal concerns.

For example, initiatives like the Partnership on AI bring together diverse stakeholders to ensure AI is beneficial to all.

User Education

As Daniel J. Boorstin said, “The greatest enemy of knowledge is not ignorance, it is the illusion of knowledge.”

Educating users is essential for building long-term trust in AI.

By providing clear guidelines and resources, we empower users to make informed decisions about their interaction with AI technologies.

Platforms like Coursera and edX offer courses to help individuals understand the implications of AI in their daily lives. Informed users are more likely to trust and use AI responsibly.

Balancing Innovation and Ethics

Innovation should never come at the expense of ethics.

As AI continues to revolutionize industries, it’s crucial to strike a balance between progress and the preservation of human values.

Ethical considerations must guide every step, ensuring that AI benefits society without causing harm.

For instance, prioritizing sustainability in AI development helps prevent overexploitation of resources.

FAQs

1. What makes AI content trustworthy?

Transparency, fairness, robustness, privacy, and accountability ensure AI content is reliable and ethical.

2. How does trustworthy AI content benefit society?

It fosters trust, encourages innovation, and ensures AI serves humanity responsibly.

3. What are the risks of untrustworthy AI content?

Misinformation, bias, and privacy violations can erode trust and harm users.

4. How can AI developers ensure fairness?

By using diverse training data and implementing rigorous bias mitigation strategies.

5. Why is transparency crucial in AI?

It helps users understand AI decisions, building trust and reducing uncertainty.

6. Are there any global standards for trustworthy AI?

Yes, frameworks like the EU’s Ethics Guidelines for Trustworthy AI provide global benchmarks.

7. What role does privacy play in AI content?

Ethical data handling ensures user information is protected, fostering trust and compliance.

Conclusion

Trustworthy AI content is more than just a technical achievement; it’s a societal necessity that impacts every facet of our lives, from healthcare to education, business, and beyond.

As AI continues to evolve and gain power, the expectations we place on it grow just as quickly.

By embracing key principles like transparency, fairness, and accountability, we can create a solid foundation for AI to truly benefit humanity in meaningful ways.

The question isn’t whether we need trustworthy AI—it’s how quickly we can make it a reality and ensure that it is built responsibly. Are we ready to collaborate across industries, governments, and communities to build a future where AI enriches our lives and empowers us all?

The time to act is now. Let’s seize this opportunity and make that future a reality.